Optimization problem

In mathematics and computer science, an optimization problem is the problem of finding the best solution from all feasible solutions. Optimization problems can be divided into two categories depending on whether the variables are continuous or discrete. An optimization problem with discrete variables is known as a combinatorial optimization problem. In a combinatorial optimization problem, we are looking for an object such as an integer, permutation or graph from a finite (or possibly countably infinite) set. Problems with continuous variables include constrained problems and multimodal problems.

Continuous optimization problem

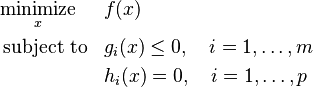

The standard form of a (continuous) optimization problem is[1]

where

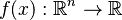

is the objective function to be minimized over the variable

is the objective function to be minimized over the variable  ,

, are called inequality constraints, and

are called inequality constraints, and are called equality constraints.

are called equality constraints.

By convention, the standard form defines a minimization problem. A maximization problem can be treated by negating the objective function.
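As an illustration of the standard form and of the negation trick, the following Python sketch checks the constraints $g_i(x) \le 0$ and $h_j(x) = 0$ on a finite list of candidate points and picks the best feasible one. It is not a real solver: the helper names (`is_feasible`, `minimize_over`, `maximize_over`), the scalar variable, the tolerance and the sample problem are all assumptions made for this example.

```python
from typing import Callable, Iterable, Sequence

Constraint = Callable[[float], float]


def is_feasible(x: float, ineqs: Sequence[Constraint],
                eqs: Sequence[Constraint], tol: float = 1e-9) -> bool:
    """Check g_i(x) <= 0 for every i and h_j(x) = 0 for every j (up to tol)."""
    return (all(g(x) <= tol for g in ineqs)
            and all(abs(h(x)) <= tol for h in eqs))


def minimize_over(candidates: Iterable[float], f: Callable[[float], float],
                  ineqs: Sequence[Constraint] = (),
                  eqs: Sequence[Constraint] = ()) -> float:
    """Return the feasible candidate with the smallest objective value."""
    feasible = [x for x in candidates if is_feasible(x, ineqs, eqs)]
    if not feasible:
        raise ValueError("no feasible candidate")
    return min(feasible, key=f)


def maximize_over(candidates: Iterable[float], f: Callable[[float], float],
                  ineqs: Sequence[Constraint] = (),
                  eqs: Sequence[Constraint] = ()) -> float:
    """Maximize f by minimizing its negation, as in the standard form."""
    return minimize_over(candidates, lambda x: -f(x), ineqs, eqs)


# Hypothetical problem: maximize x * (4 - x) subject to x - 1.5 <= 0.
grid = [k / 100 for k in range(401)]  # candidate points 0.00, 0.01, ..., 4.00
best = maximize_over(grid, lambda x: x * (4 - x), ineqs=[lambda x: x - 1.5])
print(best)  # 1.5
```

The unconstrained maximum of this objective is at $x = 2$, so the inequality constraint is active and the best feasible candidate sits on its boundary.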

Combinatorial optimization problem

Formally, a combinatorial optimization problem  is a quadruple

is a quadruple  , where

, where

is a set of instances;

is a set of instances;- given an instance

,

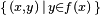

,  is the set of feasible solutions;

is the set of feasible solutions; - given an instance

and a feasible solution

and a feasible solution  of

of  ,

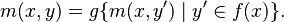

,  denotes the measure of

denotes the measure of  , which is usually a positive real.

, which is usually a positive real.  is the goal function, and is either

is the goal function, and is either  or

or  .

.

The goal is then to find for some instance  an optimal solution, that is, a feasible solution

an optimal solution, that is, a feasible solution  with

with
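The quadruple of instances, feasible solutions, measure and goal function can be mirrored quite directly in code. The sketch below is only an illustration: the class name `CombinatorialProblem`, its brute-force `solve` method and the tiny knapsack instance are assumptions made for this example, and the set of instances is left implicit as "whatever the feasibility function accepts".

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Any, Callable, Iterable


@dataclass(frozen=True)
class CombinatorialProblem:
    feasible: Callable[[Any], Iterable[Any]]  # maps an instance to its feasible solutions
    measure: Callable[[Any, Any], float]      # maps (instance, solution) to its measure
    goal: Callable[..., Any]                  # the goal function: built-in min or max

    def solve(self, x: Any) -> Any:
        """Brute force: return a feasible solution whose measure is optimal."""
        return self.goal(self.feasible(x), key=lambda y: self.measure(x, y))


def knapsack_feasible(x):
    """Yield every index subset whose total weight fits the capacity."""
    items, capacity = x
    for r in range(len(items) + 1):
        for y in combinations(range(len(items)), r):
            if sum(items[i][0] for i in y) <= capacity:
                yield y


def knapsack_measure(x, y):
    """Total value of the chosen items."""
    items, _ = x
    return sum(items[i][1] for i in y)


knapsack = CombinatorialProblem(knapsack_feasible, knapsack_measure, max)

# Hypothetical instance: (weight, value) pairs and a capacity of 5.
instance = (((2, 3), (3, 4), (4, 5), (5, 6)), 5)
best = knapsack.solve(instance)
print(best, knapsack_measure(instance, best))  # (0, 1) 7
```

Enumerating all feasible solutions takes exponential time in general; the point of the sketch is the shape of the definition, not an efficient algorithm.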

For each combinatorial optimization problem, there is a corresponding decision problem that asks whether there is a feasible solution for some particular measure $m_0$. For example, if there is a graph $G$ which contains vertices $u$ and $v$, an optimization problem might be "find a path from $u$ to $v$ that uses the fewest edges". This problem might have an answer of, say, 4. A corresponding decision problem would be "is there a path from $u$ to $v$ that uses 10 or fewer edges?" This problem can be answered with a simple 'yes' or 'no'.
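The path example can be made concrete with a breadth-first search. In the sketch below, the function names `fewest_edges` and `has_path_within` and the sample graph are assumptions chosen so that the optimization answer is 4, matching the figure used in the text.

```python
from collections import deque
from typing import Dict, Hashable, List, Optional

Graph = Dict[Hashable, List[Hashable]]


def fewest_edges(graph: Graph, u: Hashable, v: Hashable) -> Optional[int]:
    """Optimization version: number of edges on a shortest u-v path, or None."""
    dist = {u: 0}
    queue = deque([u])
    while queue:
        node = queue.popleft()
        if node == v:
            return dist[node]
        for nxt in graph.get(node, []):
            if nxt not in dist:  # first visit is along a shortest path
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return None


def has_path_within(graph: Graph, u: Hashable, v: Hashable, k: int) -> bool:
    """Decision version: is there a u-v path that uses k or fewer edges?"""
    d = fewest_edges(graph, u, v)
    return d is not None and d <= k


# Hypothetical undirected graph with two u-v routes, of 4 and 6 edges.
G = {
    "u": ["a", "p"], "a": ["u", "b"], "b": ["a", "c"], "c": ["b", "v"],
    "v": ["c", "t"], "p": ["u", "q"], "q": ["p", "r"], "r": ["q", "s"],
    "s": ["r", "t"], "t": ["s", "v"],
}
print(fewest_edges(G, "u", "v"))         # 4
print(has_path_within(G, "u", "v", 10))  # True
print(has_path_within(G, "u", "v", 3))   # False
```

Here the decision version is answered by solving the optimization version first. The converse also works for this problem: asking the decision question for increasing values of the bound recovers the optimum.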

In the field of approximation algorithms, algorithms are designed to find near-optimal solutions to hard problems. The usual decision version is then an inadequate definition of the problem since it only specifies acceptable solutions. Even though we could introduce suitable decision problems, the problem is more naturally characterized as an optimization problem.[2]

NP optimization problem

An NP-optimization problem (NPO) is a combinatorial optimization problem with the following additional conditions.[3] Note that the polynomials referred to below are functions of the size of the respective functions' inputs, not the size of some implicit set of input instances.

- the size of every feasible solution $y \in f(x)$ is polynomially bounded in the size of the given instance $x$,

- the languages $\{\, x \mid x \in I \,\}$ and $\{\, (x, y) \mid y \in f(x) \,\}$ can be recognized in polynomial time, and

- $m$ is polynomial-time computable.

This implies that the corresponding decision problem is in NP. In computer science, interesting optimization problems usually have the above properties and are therefore NPO problems. A problem is additionally called a P-optimization (PO) problem if there exists an algorithm which finds optimal solutions in polynomial time. Often, when dealing with the class NPO, one is interested in optimization problems for which the decision versions are NP-complete. Note that hardness relations are always with respect to some reduction. Due to the connection between approximation algorithms and computational optimization problems, reductions that preserve approximation in some respect are preferred in this context over the usual Turing and Karp reductions. An example of such a reduction would be the L-reduction. For this reason, optimization problems with NP-complete decision versions are not necessarily called NPO-complete.[4]
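To see why the NPO conditions place the decision version in NP, note that a feasible solution can serve as a certificate: it is polynomially short, its feasibility can be recognized in polynomial time, and its measure can be computed in polynomial time. The sketch below illustrates this with minimum vertex cover, a problem chosen here only as an example; the function names are assumptions.

```python
from typing import Iterable, Sequence, Set, Tuple

Edge = Tuple[int, int]


def is_vertex_cover(edges: Sequence[Edge], cover: Set[int]) -> bool:
    """Feasibility check: every edge has at least one endpoint in the cover."""
    return all(a in cover or b in cover for a, b in edges)


def verify_decision(edges: Sequence[Edge], k: int,
                    certificate: Iterable[int]) -> bool:
    """Polynomial-time verifier for 'is there a vertex cover of size <= k?'.

    The certificate is a candidate solution; its measure is its size.
    """
    cover = set(certificate)
    return is_vertex_cover(edges, cover) and len(cover) <= k


# Hypothetical instance: the path 1 - 2 - 3 - 4.
path_edges = [(1, 2), (2, 3), (3, 4)]
print(verify_decision(path_edges, 2, [2, 3]))  # True
print(verify_decision(path_edges, 2, [2]))     # False: edge (3, 4) is uncovered
print(verify_decision(path_edges, 1, [2, 3]))  # False: the cover is too large
```

The verifier only checks a given certificate. Finding one is the hard part, and nothing in the sketch suggests it can be done in polynomial time.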

NPO is divided into the following subclasses according to their approximability:[3]

- NPO(I): Equals FPTAS. Contains the Knapsack problem.

- NPO(II): Equals PTAS. Contains the Makespan scheduling problem.

- NPO(III): The class of NPO problems that have polynomial-time algorithms which compute solutions with a cost at most $c$ times the optimal cost (for minimization problems) or a cost at least $1/c$ of the optimal cost (for maximization problems). In Hromkovič's book, all NPO(II)-problems are excluded from this class unless P=NP. Without the exclusion, the class equals APX. Contains MAX-SAT and metric TSP.

- NPO(IV): The class of NPO problems with polynomial-time algorithms approximating the optimal solution by a ratio that is polynomial in a logarithm of the size of the input. In Hromkovič's book, all NPO(III)-problems are excluded from this class unless P=NP. Contains the set cover problem.

- NPO(V): The class of NPO problems with polynomial-time algorithms approximating the optimal solution by a ratio bounded by some function of n. In Hromkovič's book, all NPO(IV)-problems are excluded from this class unless P=NP. Contains the TSP and Max Clique problems.
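As an illustration of a logarithmic approximation ratio, the following sketch implements the classical greedy heuristic for set cover, which repeatedly picks the subset covering the most still-uncovered elements. Its cover is known to be at most about $\ln n + 1$ times the optimal size for a universe of $n$ elements. The function name `greedy_set_cover` and the sample instance are assumptions made for this example.

```python
from typing import Iterable, List, Sequence, Set


def greedy_set_cover(universe: Iterable[int],
                     subsets: Sequence[Set[int]]) -> List[int]:
    """Return indices of subsets chosen greedily until the universe is covered."""
    uncovered = set(universe)
    chosen: List[int] = []
    while uncovered:
        # Pick the subset that covers the most still-uncovered elements.
        best = max(range(len(subsets)),
                   key=lambda i: len(subsets[i] & uncovered))
        if not subsets[best] & uncovered:
            raise ValueError("subsets do not cover the universe")
        chosen.append(best)
        uncovered -= subsets[best]
    return chosen


# Hypothetical instance over the universe {1, ..., 6}.
family = [{1, 2, 3, 4}, {1, 2, 5}, {3, 4, 6}, {5, 6}]
cover = greedy_set_cover(range(1, 7), family)
print(cover)  # [0, 3]
```

On this small instance the greedy choice happens to be optimal. In general it is only guaranteed to stay within the logarithmic factor.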

Another class of interest is NPOPB, NPO with polynomially bounded cost functions. Problems with this condition have many desirable properties.

References

See also

- Mathematical optimization

- Semi-infinite programming

- Decision problem

- Search problem

- Counting problem (complexity)

- Function problem
