Presto at Twitter

- 1. Presto at Twitter From Alpha to Production Bill Graham - @billgraham Sailesh Mittal - @saileshmittal March 22, 2016 Facebook Presto Meetup

- 2. ● Scheduled jobs: Pig ● Ad-hoc jobs: Pig Previously at Twitter

- 3. ● Pig out ● Scalding in Then

- 4. ● Scheduled jobs: Scalding ● Ad-hoc queries for engineers: Scalding REPL ● Ad-hoc queries for non-engineers: ? ● Low-latency queries: ? Then

- 5. ● Scheduled jobs: Scalding ● Ad-hoc queries: Presto ● Low-latency queries: Presto Now

- 6. ● Qualitative comparison early 2015 ● Considered: Presto, SparkSQL, Impala, Drill, and Hive-on-Tez ● Selected Presto ○ Maturity: high ○ Customer feedback: high ○ Ease of deploy: high ○ Community: strong, open ○ Nested data: yes ○ Language: Java Evaluation

- 7. ● Cloudera ● Hortonworks ● Yahoo ● MapR ● Rocana ● Stripe ● Playtika ● Facebook ● Dropbox ● Nielsen ● TellApart ● Netflix ● JD.com Thanks to those we consulted with Evaluation

- 8. ● Deployment ● Integration ● Monitoring/Alerting ● Log Collection ● Authorization ● Stability Alpha to Beta to Production

- 9. ● 192 bare-metal workers ● 76GB RAM ● 24 cores ● 2 x 1 GbE NIC Cluster

- 10. ● Publish to internal Maven repo ● Python + pssh ● Brittle Deployment

- 11. ● Building a dedicated Mesos cluster ○ 200 nodes ○ 128GB RAM ○ 56 cores ○ 10 GbE ● One worker per container per host ● Consistent support model within Twitter Mesos/Aurora

- 13. ● Internal system called viz ● Plugin on each node ● curl JMX stats and send ● Load spiky by nature, alerts hard Monitoring & Alerting
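
The "curl JMX stats and send" step can be sketched roughly as follows. This is a minimal illustration, not the plugin used at Twitter: it assumes the node exposes MBeans as JSON over HTTP (the `/v1/jmx/mbean` path and the `objectName`/`attributes` payload shape are assumptions here), and the function names are hypothetical. Sending the flattened metrics to the internal viz system is left out.

```python
import json
import urllib.request
from typing import Any, Dict, List


def fetch_mbeans(base_url: str) -> List[Dict[str, Any]]:
    """Fetch all MBeans as JSON. The endpoint path is an assumption."""
    with urllib.request.urlopen(f"{base_url}/v1/jmx/mbean") as resp:
        return json.load(resp)


def flatten_mbeans(mbeans: List[Dict[str, Any]]) -> Dict[str, float]:
    """Turn a list of MBeans into {"<objectName>.<attribute>": value}.

    Only numeric attributes are kept; booleans, strings and nested
    structures are skipped because they are not plottable as gauges.
    """
    metrics: Dict[str, float] = {}
    for mbean in mbeans:
        name = mbean.get("objectName", "unknown")
        for attr in mbean.get("attributes", []):
            value = attr.get("value")
            # bool is a subclass of int in Python, so exclude it explicitly.
            if isinstance(value, bool) or not isinstance(value, (int, float)):
                continue
            metrics[f"{name}.{attr['name']}"] = float(value)
    return metrics


# Hypothetical payload, shaped like the assumed endpoint response.
SAMPLE = json.loads("""
[{"objectName": "java.lang:type=Threading",
  "attributes": [{"name": "ThreadCount", "value": 1500},
                 {"name": "ThreadCpuTimeEnabled", "value": true}]}]
""")

if __name__ == "__main__":
    for key, value in flatten_mbeans(SAMPLE).items():
        print(key, value)
```

Keeping the parsing separate from the HTTP call makes the flattening logic easy to check without a running cluster.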

- 15. ● Internal system called loglens ● Java LogHandler adapters ● Airlift integration challenges ● Using Python log tailing adapter Log Collection
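
A log-tailing adapter of the kind mentioned above might look roughly like this. It is a sketch under assumptions, not the adapter used with loglens: the function name is hypothetical, and shipping the lines to a collector is omitted. It reads only complete lines appended since the last offset and restarts from the beginning if the file shrank (for example after rotation).

```python
import os
from typing import List, Tuple


def read_new_lines(path: str, offset: int) -> Tuple[List[str], int]:
    """Return complete lines appended since `offset`, plus the new offset.

    A trailing partial line (no newline yet) is left for the next call,
    so a line is never shipped half-written.
    """
    if os.path.getsize(path) < offset:
        # File was truncated or rotated: start again from the top.
        offset = 0
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    end = data.rfind(b"\n")
    if end == -1:
        return [], offset
    complete = data[: end + 1]
    lines = complete.decode("utf-8", errors="replace").splitlines()
    return lines, offset + len(complete)
```

A caller would invoke `read_new_lines` in a loop with a short sleep, persisting the offset between calls so a restart does not resend old lines.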

- 16. Log Collection

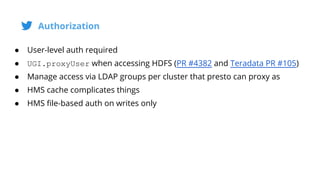

- 17. ● User-level auth required ● UGI.proxyUser when accessing HDFS (PR #4382 and Teradata PR #105) ● Manage access via per-cluster LDAP groups that Presto can proxy as ● HMS cache complicates things ● HMS file-based auth on writes only Authorization

- 18. ● Hadoop client memory leaks (user x query x FileSystems) ● GC Pressure on coordinator ● Implemented FileSystem cache (user x FileSystems) Authorization Challenges
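
The idea behind moving from "user x query x FileSystems" to "user x FileSystems" can be illustrated with a small cache keyed by user only. This is a conceptual sketch in Python, not the actual change (which lives in Java code on the Presto side); the class name and factory callback are hypothetical, and eviction is deliberately left out.

```python
import threading
from typing import Callable, Dict, Generic, TypeVar

T = TypeVar("T")


class PerUserCache(Generic[T]):
    """Cache one expensive client object per user.

    Creating a new client per (user, query) leaks clients as queries
    accumulate; keying by user alone bounds the count by the number
    of distinct users.
    """

    def __init__(self, factory: Callable[[str], T]) -> None:
        self._factory = factory
        self._lock = threading.Lock()
        self._by_user: Dict[str, T] = {}

    def get(self, user: str) -> T:
        # The lock ensures concurrent queries from the same user
        # share a single client instead of racing to create two.
        with self._lock:
            if user not in self._by_user:
                self._by_user[user] = self._factory(user)
            return self._by_user[user]

    def __len__(self) -> int:
        with self._lock:
            return len(self._by_user)
```

With this shape, a thousand queries from ten users hold ten client objects rather than a thousand, which is the reduction in coordinator GC pressure the slide describes.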

- 19. java.lang.OutOfMemoryError: unable to create new native thread ● Queries failing on the coordinator ● Coordinator is thread-hungry, up to 1500 threads ● Default user process limit is 1024 $ ulimit -u 1024 ● Increase ulimit Stability #1
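
A startup check for this failure mode can be sketched with Python's standard `resource` module, which reads the same per-user process limit that `ulimit -u` reports (`RLIMIT_NPROC`; on Linux, threads count against it). The helper names are hypothetical and the 1500 figure is the thread count quoted on the slide; the module is Unix-only.

```python
import resource


def limit_is_sufficient(soft_limit: int, threads_needed: int) -> bool:
    """True if the soft limit allows at least `threads_needed` threads."""
    return soft_limit == resource.RLIM_INFINITY or soft_limit >= threads_needed


def current_nproc_soft_limit() -> int:
    """Soft per-user process/thread limit, as shown by `ulimit -u`."""
    soft, _hard = resource.getrlimit(resource.RLIMIT_NPROC)
    return soft


if __name__ == "__main__":
    soft = current_nproc_soft_limit()
    if not limit_is_sufficient(soft, 1500):
        print(f"nproc soft limit {soft} is below 1500; raise it before starting")
```

With the default of 1024 the check fails, which matches the "unable to create new native thread" errors seen on the coordinator.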

- 20. Encountered too many errors talking to a worker node ● Outbound network spikes hitting caps (300 Mb/s) ● Coordinator sending plan was costly (Fixed in PR #4538) ● Tuned timeouts ● Increased Tx cap Stability #2

- 21. Encountered too many errors talking to a worker node ● Timeouts still being hit ● Correlated GC pauses with errors ● Tuned GC ● Changed to G1GC collector - BAM! ● 10s of seconds -> 100s of millis Stability #3
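
Correlating GC pauses with errors can be as simple as checking which error timestamps fall inside a pause window. The sketch below assumes pauses and errors have already been extracted from logs as epoch-second values; the function name, the `slack` parameter, and the sample numbers are all hypothetical.

```python
from typing import List, Tuple


def errors_during_pauses(
    pauses: List[Tuple[float, float]],
    errors: List[float],
    slack: float = 0.0,
) -> List[float]:
    """Return error timestamps that fall within any GC pause.

    `pauses` holds (start, duration) pairs in seconds. `slack` widens
    each pause window on both sides to allow for clock skew or for
    timeouts that fire shortly after the pause ends.
    """
    hits = []
    for ts in errors:
        if any(
            start - slack <= ts <= start + duration + slack
            for start, duration in pauses
        ):
            hits.append(ts)
    return hits


if __name__ == "__main__":
    # Made-up data: one 20-second pause starting at t=100.
    print(errors_during_pauses([(100.0, 20.0)], [95.0, 110.0, 130.0]))
```

If most worker-communication errors land inside pause windows, GC tuning is a more promising fix than further timeout changes.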

- 23. No worker nodes available ● Happens sporadically ● Network, HTTP responses, GC all look good ● Problem: workers saturating NICs (2 Gb/sec) ● Solution #1: reduce task.max-worker-threads ● Solution #2: Larger NICs Stability #4
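
Measuring NIC throughput against capacity can be sketched by sampling the transmit byte counters in Linux's `/proc/net/dev` twice and dividing by the link capacity. The helper names are hypothetical; the 2 Gb/s capacity in the usage example corresponds to the 2 x 1 GbE NICs described earlier in the deck.

```python
from typing import Dict


def parse_tx_bytes(proc_net_dev: str) -> Dict[str, int]:
    """Extract cumulative transmitted bytes per interface.

    After the two header lines, each row is "<iface>: <16 counters>";
    the first eight counters are receive-side and the ninth is
    transmitted bytes.
    """
    counters: Dict[str, int] = {}
    for line in proc_net_dev.splitlines()[2:]:
        if ":" not in line:
            continue
        iface, rest = line.split(":", 1)
        fields = rest.split()
        if len(fields) >= 9:
            counters[iface.strip()] = int(fields[8])
    return counters


def utilization(tx_before: int, tx_after: int, seconds: float,
                capacity_bits_per_sec: float) -> float:
    """Fraction of link capacity used between two counter samples."""
    bits_sent = (tx_after - tx_before) * 8
    return bits_sent / seconds / capacity_bits_per_sec


# Hypothetical snapshot in the /proc/net/dev layout.
SAMPLE = """Inter-|   Receive                                                |  Transmit
 face |bytes    packets errs drop fifo frame compressed multicast|bytes    packets errs drop fifo colls carrier compressed
  eth0: 1000 10 0 0 0 0 0 0 2000 20 0 0 0 0 0 0
"""
```

For example, 250,000,000 bytes sent in one second is 2 Gb/s, so `utilization(0, 250_000_000, 1.0, 2e9)` reports a fully saturated 2 Gb/s link. A sustained value near 1.0 is the signal that led to the two solutions listed on the slide.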

- 24. ● Distributed log collection ● Metrics tracking ● Measure and Tune JVM pauses ● G1 Garbage Collector ● Measure network/NIC throughput vs capacity Lessons Learned

- 25. ● MySQL connector with per-user auth ● Support for LZO/Thrift ● Improvements for Parquet nested data structures Future Work

- 26. Q&A