This document assumes basic knowledge of TRex and that TRex is already installed and configured. For background, see the manual, especially the material up to the Basic Usage section, and the stateless documentation for a better understanding of the interactive model. Consider this document an extension of the manual; it might be integrated into it in the future.

TRex supports Stateless (STL) and Stateful (STF) modes.

This document describes the new Advanced Stateful mode (ASTF), which supports the TCP layer.

The following UDP/TCP related use-cases will be addressed by ASTF mode.

-

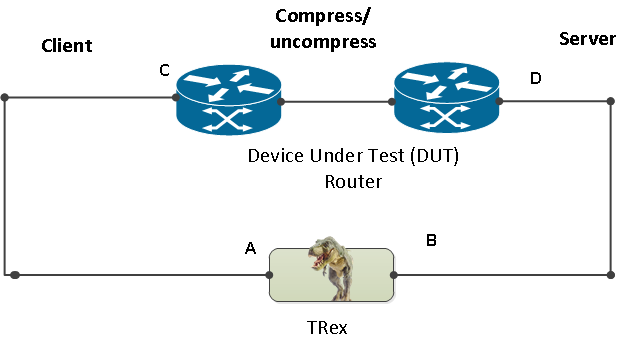

Ability to work when the DUT terminates the TCP stack (e.g. compress/uncompress, see figure 1). In this case there is a different TCP session on each side, but L7 data are almost the same.

-

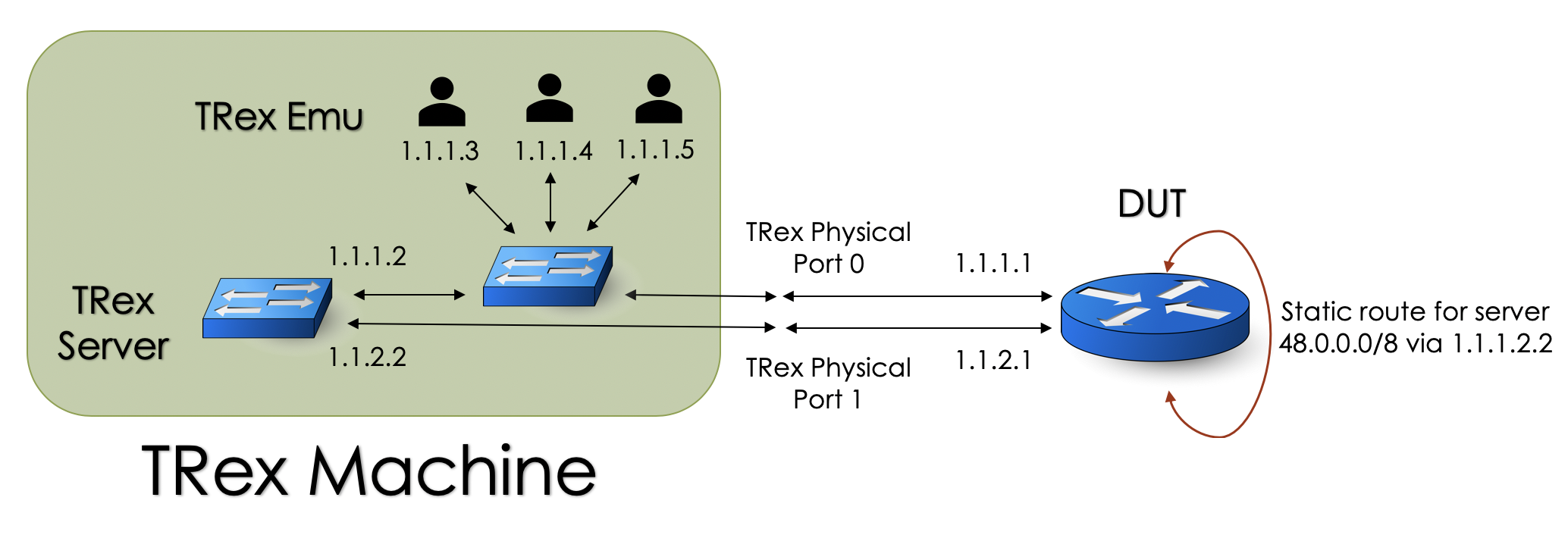

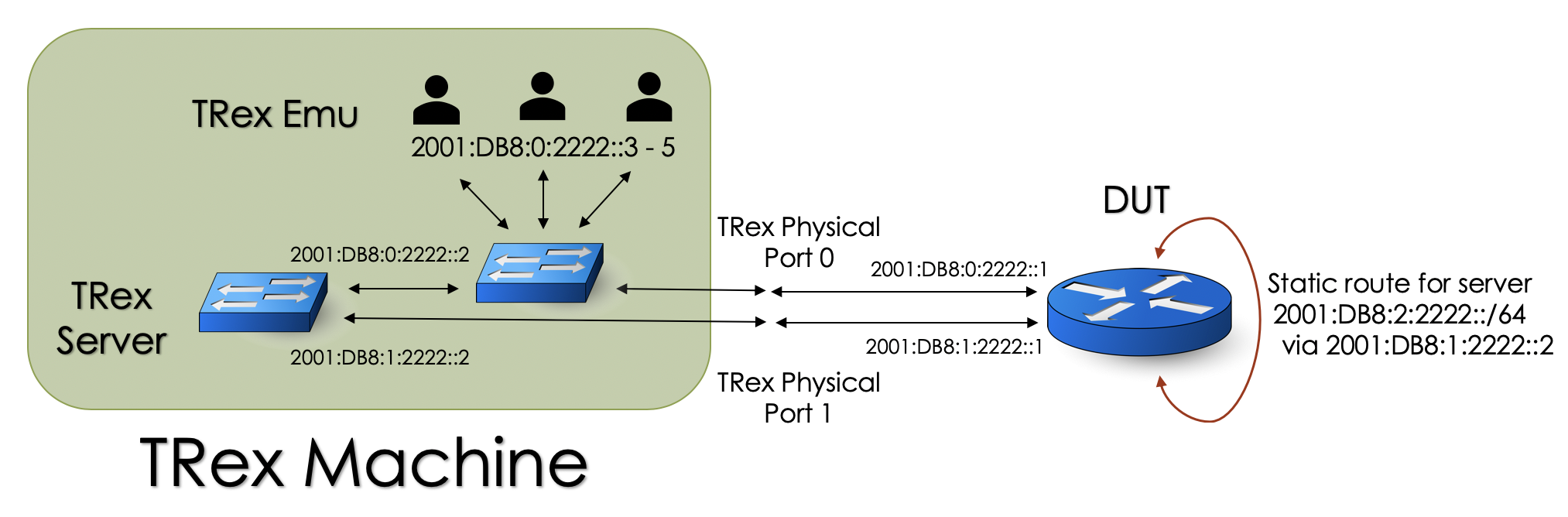

Ability to work in either client mode or server mode. This way TRex client side could be installed in one physical location on the network and TRex server in another. figure 2 shows such an example

-

Performance and scale

-

High bandwidth - ~200gb/sec with many realistic flows (not one elephant flow)

-

High connection rate - order of MCPS

-

Scale to millions of active established flows

-

-

Simulate latency/jitter/drop in high rate

-

Emulate L7 application, e.g. HTTP/HTTPS/Citrix- there is no need to implement the exact application.

-

Simulate L7 application on top of TLS using OpenSSL

-

BSD baseTCP implementation

-

Ability to change fields in the L7 stream application - for example, change HTTP User-Agent field

-

Interactive support - Fast Console, GUI

-

TCP/UDP/Application statistics (per client side/per template)

-

Verify incoming IP/TCP/UDP checksum

-

Python 2.7/3.0 Client API

-

Ability to build a realistic traffic profile that includes TCP and UDP protocols (e.g. SFR EMIX)

-

IPv6/IPv4

-

Fragmentation support

-

Accurate latency for TCP flows - SYN/SYN ACK and REQ/RES latency histogram, usec resolution

Warning: ASTF support was released, however it is under constant improvement.

- Profile with multi templates of TCP/UDP
- IPv4 and IPv6
- VLAN configuration
- Enable client only or server only or both
- High scale with flows/BW/PPS
- Ability to change IPv4/IPv6 configuration like default TOS etc.
- Flexible tuple generator
- Automation support - fast interactive support, Fast Console
- Ability to change the TCP configuration (default MSS/buffer size/RFC enabled etc.)
- Client Cluster (same format as STF for batch, new Python format for interactive)
- Basic L7 emulation capability, e.g. random delay, loops, variables, Spirent and IXIA like TCP traffic patterns, elephant flows
- Tunable profile support - pass a few tunables from the console to the Python profile (e.g. --total-bw 10gbps)
- More than one core per dual-ports
- Ability to use all ports as clients or server
- TCP statistics per template
- TLS support
- IPv6 traffic is assumed to be generated by TRex itself (only the 32-bit LSB is taken as a key)
- Simulation of jitter/latency/drop
- Field Engine support - ability to change a field inside the stream
- Accurate latency for TCP sessions. Measure a sample of the flows in the EF/low latency queue. Measure the SYN/SYN-ACK and REQ/RES latency histogram
- Fragmentation is not supported
- Advanced L7 emulation capability
  - Add to the send command the ability to signal in the middle of the queue size (today it is always at the end)
  - Change TCP/UDP stream fields (e.g. User-Agent)
  - Protocol-specific commands (e.g. wait_for_http() will parse the header and wait for the size)
  - Commands for L7 dynamic counters (e.g. wait_for_http() will register dynamic counters)

Can we leverage one of existing DPDK TCP stacks for our need? The short answer is no.

We chose to take the original BSD4.4 code base with FreeBSD bug-fix patches and improve its scalability to address our needs (the code now has the latest FreeBSD logic).

More on the reasons in the following sections, but in short: the existing DPDK TCP stacks are optimized for real client/server applications and APIs, while in most of our traffic generation use cases most of the traffic is known ahead of time, which allows us to do much better.

Let’s look at the main properties of the TRex TCP module and the main challenges we tried to solve.

- Interact with DPDK API for batching of packets
- Multi-instance - lock free. Each thread will get its own TCP context with local counters/configuration, flow-table etc., RSS
- Async, event driven - no OS API/threads needed
  - Start write buffer
  - Continue write
  - End write
  - Read buffer/timeout
  - OnConnect/OnReset/OnClose
- Accurate with respect to TCP RFCs - at least derive from BSD to be compatible - no need to reinvent the wheel
- Enhanced TCP statistics - as a traffic generator we need to gather as many statistics as we can, for example per template TCP statistics.
- Ability to save descriptors for better simulation of latency/jitter/drop

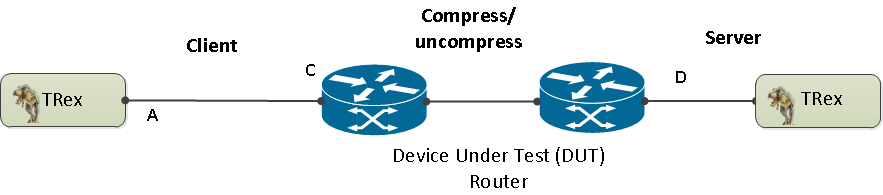

The folowing figure shows the block diagram of new TRex TCP design

And now lets proceed to our challenges, let me just repeat the objective of TRex, it is not to reach a high rate with one flow, it is to simulate a realistic network with many clients using small flows. Let’s try to see if we can solve the scale of million of flows.

Most TCP stacks have an API that allows the user to provide a buffer for write (push), and the TCP module saves it until the packets are acknowledged by the remote side. Figure 4 shows what one TX queue of one TCP flow looks like on the Tx side. This could create a scale issue in the worst case. Let’s assume we need 1M active flows with a 64K TX buffer each (a reasonable buffer, assuming the RTT is small). The worst-case buffer in this case could be 1M x 64K x mbuf-factor (let’s assume 2) = 128GB. The mbuf resource is expensive and needs to be allocated ahead of time. The solution we chose for this problem (which makes sense from a traffic generator’s point of view) is to change the API to a poll API, meaning TCP requests the buffers from the application layer only when packets need to be sent (lazy). Because most of the traffic is constant in our case, we can save a lot of memory and have an unlimited scale (both of flows and of TX window).

Note: This optimization won’t work with TLS since constant sessions will have new data.
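The worst-case figure quoted above can be reproduced with a few lines of arithmetic. This is only a sketch of the calculation in the text; the function name is illustrative, and "1M" and "64K" are taken as binary units (2**20 flows, 2**16 bytes) so that the result comes out at exactly 128 GiB.

```python
def worst_case_tx_buffer_bytes(flows: int, tx_window_bytes: int, mbuf_factor: int = 2) -> int:
    """Worst-case memory if every flow keeps a full TX window queued."""
    return flows * tx_window_bytes * mbuf_factor


GIB = 1024 ** 3
flows = 1024 * 1024      # "1M" active flows, taken here as 2**20
tx_window = 64 * 1024    # 64K TX buffer per flow

total = worst_case_tx_buffer_bytes(flows, tx_window, mbuf_factor=2)
print(f"worst case: {total // GIB} GiB")
```

With a poll API the stack does not hold this memory at all for constant traffic, which is why the text describes the scale as no longer bounded by the TX window.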

The same problem exists for reassembly on the rx side: in the worst case a lot of memory must be stored in the reassembly queue. To fix this we can add a filter API for the application layer. Let’s assume that the application layer can request only a partial portion of the data since the rest is less important, for example data at offset 61K-64K and only in case of retransmission (simulation). In this case we can give the application layer only the filtered data that is really important to it and still allow the TCP layer to work in the same way from a seq/ack perspective.

Note: This optimization won’t work with TLS since constant sessions will have new data.

There is a requirement to simulate latency/jitter/drop in the network layer. Simulating drop at a high rate is not a problem, but simulating latency/jitter at a high rate is a challenge because a large number of packets must be queued (see figure 6, on the left). A better solution is to queue a pointer to both the TCP flow and the TCP descriptor (with TSO information) and to build the packet again only when needed, i.e. when it has already left the tx queue (lazy). The memory footprint in this case can be reduced dramatically.

To emulate an L7 application on top of the TCP layer we can define a set of simple operations. The user can build an application emulation layer from the Python API or with a utility that we will provide, which analyzes a pcap file and converts it to TCP operations. Another thing that we can learn from the pcap is the TCP parameters, like MSS/window size/Nagle/TCP options etc. Here is a simple example of an L7 emulation of an HTTP client and an HTTP server:

HTTP client:

send(request,len=100)

wait_for_response(len<=1000)

delay(random(100-1000)*usec)

send(request2,len=200)

wait_for_response(len<=2000)

close()

HTTP server:

wait_for_request(len<=100)

send_response(data,len=1000)

wait_for_request(len<=200)

send_response(data,len=2000)

close()

This way neither the client nor the server needs to know the exact application protocol; they just need to have the same story/program. In a real HTTP server, the server parses the HTTP request, learns the Content-Length field, waits for the rest of the data and finally retrieves the information from disk. With our L7 emulation there is no need for that. Even in cases where the data length changes (for example a NAT/LB that changes the data length), we can give some flexibility within the program on the value range of the length. In the case of UDP, which is a message-based protocol, the operations are message based, like send_msg/wait_for_msg etc.
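The "same story" idea can be checked mechanically. The sketch below is a toy model, not the TRex program format: each side is a list of `(operation, byte_count)` steps with hypothetical names, and the check confirms that every send on one side is mirrored by a receive on the other whose length limit is large enough.

```python
# Toy model of the two programs from the text (delay and close are omitted).
CLIENT_PROGRAM = [("send", 100), ("recv", 1000), ("send", 200), ("recv", 2000)]
SERVER_PROGRAM = [("recv", 100), ("send", 1000), ("recv", 200), ("send", 2000)]


def programs_match(client, server):
    """Return True if every client step is mirrored by the server step."""
    if len(client) != len(server):
        return False
    mirror = {"send": "recv", "recv": "send"}
    for (c_op, c_len), (s_op, s_len) in zip(client, server):
        if mirror[c_op] != s_op:
            return False
        # The receiver waits for up to its limit (len<=N in the text),
        # so the sender must not exceed it.
        sent, limit = (c_len, s_len) if c_op == "send" else (s_len, c_len)
        if sent > limit:
            return False
    return True


print(programs_match(CLIENT_PROGRAM, SERVER_PROGRAM))
```

Because the receive side only carries an upper bound, a middlebox that shortens the data still leaves the two programs compatible, which is the flexibility the paragraph describes.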

- Same flexible tuple generator
- Same clustering mode
- Same VLAN support
- NAT - no need for complex learn mode. ASTF supports NAT64 out of the box.
- Flow order. ASTF has inherent ordering verification using the TCP layer. It also checks IP/TCP/UDP checksum out of the box.
- Latency measurement is supported in both.
- In ASTF mode you can’t control the IPG, so it is less predictable (the number of concurrent flows is less deterministic)
- ASTF can be interactive (start, stop, stats)

GPRS Tunneling Protocol (GTP) is an IP/UDP based protocol used in GSM, UMTS and LTE networks. It can be decomposed into separate protocols: GTP-C, GTP-U and GTP'.

- Currently TRex supports only the GTP-U protocol.
- In GTP-U mode TRex knows how to encapsulate/decapsulate GTP-U traffic. The client side of the TRex server is responsible for the encapsulation/decapsulation functionality.
- Loopback mode - from version v2.93 TRex supports loopback mode for testing without a DUT. The server side is aware of the GTP-U mode, decapsulates the client packets, and learns the tunnel context. When transmitting, the server encapsulates the packets with the same tunnel context.
- More than 2 ports support - from version v2.93 TRex supports more than 2 ports in GTP-U mode.
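For readers unfamiliar with what GTP-U encapsulation adds on the wire, here is a minimal sketch of the mandatory 8-byte GTPv1-U header for a G-PDU. It illustrates the generic protocol format only and is not TRex code; the function names are hypothetical, and optional header fields (sequence number, extension headers) are deliberately left out.

```python
import struct

GTPU_UDP_PORT = 2152   # registered UDP port for GTP-U
GTPU_FLAGS = 0x30      # version 1, protocol type GTP, no optional fields
GTPU_MSG_GPDU = 0xFF   # G-PDU: the payload is a user packet


def gtpu_encap(teid: int, inner_packet: bytes) -> bytes:
    """Prepend the 8-byte mandatory GTP-U header to an inner IP packet."""
    header = struct.pack("!BBHI", GTPU_FLAGS, GTPU_MSG_GPDU, len(inner_packet), teid)
    return header + inner_packet


def gtpu_decap(packet: bytes):
    """Return (teid, inner_packet); reject anything but a plain G-PDU."""
    flags, msg_type, length, teid = struct.unpack("!BBHI", packet[:8])
    if flags != GTPU_FLAGS or msg_type != GTPU_MSG_GPDU:
        raise ValueError("not a plain GTP-U G-PDU")
    return teid, packet[8:8 + length]


inner = b"\x45" + bytes(19)            # placeholder 20-byte inner packet
tunnelled = gtpu_encap(0x1234, inner)
print(len(tunnelled), gtpu_decap(tunnelled)[0])
```

The tunnel endpoint identifier (TEID) returned by the decapsulation step is the kind of tunnel context that a loopback server would need to remember in order to encapsulate its replies.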

A stateless profile can be used in ASTF mode with the following limitations.

- Packets with a TCP header are not supported.
- The UDP flow in the stateless profile should not be handled by ASTF. When a received UDP packet matches an ASTF UDP flow, it will not be handled by the stateless profile.
- HW flow statistics are not supported due to conflicts with ASTF HW RSS.
- The performance will be lower than in the original stateless mode.
- TPG is not supported yet.
- start/update/stop cannot be used for the stateless profile. Instead, start_stl/update_stl/stop_stl should be used.

| Location | Description |
|---|---|
| /astf | ASTF native (py) profiles |
| /automation/trex_control_plane/interactive/trex/examples/astf | automation examples |
| /automation/trex_control_plane/interactive/trex/astf | ASTF lib compiler (convert py to JSON), Interactive lib |
| /automation/trex_control_plane/stf | STF automation (used by ASTF mode) |

The tutorials in this section demonstrate basic TRex ASTF use cases. Examples include common and moderately advanced TRex concepts.

- Goal: Define the TRex physical or virtual ports and create a configuration file.

Follow the chapter on first time configuration.

- Goal: Send simple HTTP flows.

- Traffic profile: The following profile defines one HTTP template.

- File

from trex.astf.api import *

class Prof1():

def get_profile(self):

# ip generator

ip_gen_c = ASTFIPGenDist(ip_range=["16.0.0.0", "16.0.0.255"],

distribution="seq")

ip_gen_s = ASTFIPGenDist(ip_range=["48.0.0.0", "48.0.255.255"],

distribution="seq")

ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"), (1)

dist_client=ip_gen_c,

dist_server=ip_gen_s)

return ASTFProfile(default_ip_gen=ip_gen,

cap_list=[ASTFCapInfo(

file="../avl/delay_10_http_browsing_0.pcap",

cps=1)

]) (2)

def register():

return Prof1()

(1) Define the tuple generator range for the client side and the server side

(2) The template list with relative CPS (connections per second)
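To get a feel for how many distinct clients and servers the two ranges in this profile describe, the address count can be computed with the standard `ipaddress` module. This is a side calculation, not part of the TRex API; `range_size` is a hypothetical helper.

```python
import ipaddress


def range_size(first: str, last: str) -> int:
    """Number of addresses in the inclusive IPv4 range [first, last]."""
    return int(ipaddress.IPv4Address(last)) - int(ipaddress.IPv4Address(first)) + 1


clients = range_size("16.0.0.0", "16.0.0.255")
servers = range_size("48.0.0.0", "48.0.255.255")
print(clients, servers)
```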

- Running TRex with this profile in interactive mode (v2.47 and up)

Start ASTF in interactive mode:

sudo ./t-rex-64 -i --astf

./trex-console -s [server-ip]

trex>start -f astf/http_simple.py -m 1000 -d 1000 -l 1000

trex>tui

trex>[press] t/l for astf statistics and latency

trex>stop

| client | server |

--------------------------------------------------------------------------------

m_active_flows | 39965 | 39966 | active flows

m_est_flows | 39950 | 39952 | active est flows

m_tx_bw_l7_r | 31.14 Mbps | 4.09 Gbps | tx bw

m_rx_bw_l7_r | 4.09 Gbps | 31.14 Mbps | rx bw

m_tx_pps_r |140.36 Kpps | 124.82 Kpps | tx pps

m_rx_pps_r |156.05 Kpps | 155.87 Kpps | rx pps

m_avg_size | 1.74 KB | 1.84 KB | average pkt size

- | --- | --- |

TCP | --- | --- |

- | --- | --- |

tcps_connattempt | 73936 | 0 | connections initiated

tcps_accepts | 0 | 73924 | connections accepted

tcps_connects | 73921 | 73910 | connections established

tcps_closed | 33971 | 33958 | conn. closed (includes drops)

tcps_segstimed | 213451 | 558085 | segs where we tried to get rtt

tcps_rttupdated | 213416 | 549736 | times we succeeded

tcps_delack | 344742 | 0 | delayed acks sent

tcps_sndtotal | 623780 | 558085 | total packets sent

tcps_sndpack | 73921 | 418569 | data packets sent

tcps_sndbyte | 18406329 | 2270136936 | data bytes sent

tcps_sndctrl | 73936 | 0 | control (SYN,FIN,RST) packets sent

tcps_sndacks | 475923 | 139516 | ack-only packets sent

tcps_rcvpack | 550465 | 139502 | packets received in sequence

tcps_rcvbyte | 2269941776 | 18403590 | bytes received in sequence

tcps_rcvackpack | 139495 | 549736 | rcvd ack packets

tcps_rcvackbyte | 18468679 | 2222057965 | tx bytes acked by rcvd acks

tcps_preddat | 410970 | 0 | times hdr predict ok for data pkts

tcps_rcvoopack | 0 | 0 | *out-of-order packets received #(1)

- | --- | --- |

Flow Table | --- | --- |

- | --- | --- |

redirect_rx_ok | 0 | 1 | redirect to rx OK

(1) Counters with an asterisk prefix (*) indicate some kind of error; see the counters description for more information.

- Running TRex with this profile in batch mode (planned to be deprecated)

[bash]>sudo ./t-rex-64 -f astf/http_simple.py -m 1000 -d 1000 -c 1 --astf -l 1000 -k 10

- --astf is mandatory to enable ASTF mode
- (Optional) Set -c to 1; in this version it is limited to 1 core for each dual interface
- (Optional) Use --cfg to specify a different configuration file. The default is /etc/trex_cfg.yaml.

Pressing ‘t’ while traffic is running shows the TCP JSON counters as a table.

| client | server |

--------------------------------------------------------------------------------

m_active_flows | 39965 | 39966 | active flows

m_est_flows | 39950 | 39952 | active est flows

m_tx_bw_l7_r | 31.14 Mbps | 4.09 Gbps | tx bw

m_rx_bw_l7_r | 4.09 Gbps | 31.14 Mbps | rx bw

m_tx_pps_r |140.36 Kpps | 124.82 Kpps | tx pps

m_rx_pps_r |156.05 Kpps | 155.87 Kpps | rx pps

m_avg_size | 1.74 KB | 1.84 KB | average pkt size

- | --- | --- |

TCP | --- | --- |

- | --- | --- |

tcps_connattempt | 73936 | 0 | connections initiated

tcps_accepts | 0 | 73924 | connections accepted

tcps_connects | 73921 | 73910 | connections established

tcps_closed | 33971 | 33958 | conn. closed (includes drops)

tcps_segstimed | 213451 | 558085 | segs where we tried to get rtt

tcps_rttupdated | 213416 | 549736 | times we succeeded

tcps_delack | 344742 | 0 | delayed acks sent

tcps_sndtotal | 623780 | 558085 | total packets sent

tcps_sndpack | 73921 | 418569 | data packets sent

tcps_sndbyte | 18406329 | 2270136936 | data bytes sent

tcps_sndctrl | 73936 | 0 | control (SYN,FIN,RST) packets sent

tcps_sndacks | 475923 | 139516 | ack-only packets sent

tcps_rcvpack | 550465 | 139502 | packets received in sequence

tcps_rcvbyte | 2269941776 | 18403590 | bytes received in sequence

tcps_rcvackpack | 139495 | 549736 | rcvd ack packets

tcps_rcvackbyte | 18468679 | 2222057965 | tx bytes acked by rcvd acks

tcps_preddat | 410970 | 0 | times hdr predict ok for data pkts

tcps_rcvoopack | 0 | 0 | *out-of-order packets received #(1)

- | --- | --- |

Flow Table | --- | --- |

- | --- | --- |

redirect_rx_ok | 0 | 1 | redirect to rx OK

(1) Counters with an asterisk prefix (*) indicate some kind of error; see the counters description for more information.

- Discussion

When a template with a pcap file is specified, as in this example, the Python code analyzes the L7 data of the pcap file and the TCP configuration and builds a JSON that represents:

- The client side application
- The server side application (opposite from client)
- TCP configuration for each side

template = choose_template() (1)

src_ip,dest_ip,src_port = generate from pool of client

dst_port = template.get_dest_port()

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM) (2)

s.connect(dest_ip,dst_port) (3)

# program (4)

s.write(template.request) #write the following taken from the pcap file

# GET /3384 HTTP/1.1

# Host: 22.0.0.3

# Connection: Keep-Alive

# User-Agent: Mozilla/4.0

# Accept: */*

# Accept-Language: en-us

# Accept-Encoding: gzip, deflate, compress

s.read(template.response_size) # wait for 32K bytes and compare some of it

#HTTP/1.1 200 OK

#Server: Microsoft-IIS/6.0

#Content-Type: text/html

#Content-Length: 32000

# body ..

s.close()

(1) The tuple generator is used to generate a tuple for the client and server side and to choose a template

(2) A flow is created

(3) Connect to the server

(4) Run the program based on the JSON (in this example created from the pcap file)

# if this is SYN for flow that already exist, let TCP handle it

if ( flow_table.lookup(pkt) == False ) :

# first SYN in the right direction with no flow

compare (pkt.src_ip/dst_ip to the generator ranges) # check that it is in the range or valid server IP (src_ip,dest_ip)

template= lookup_template(pkt.dest_port) #get template for the dest_port

# create a socket for TCP server

s = socket.socket(socket.AF_INET, socket.SOCK_STREAM) (1)

# bind to the port

s.bind(pkt.dst_ip, pkt.dst_port)

s.listen(1)

#program of the template (2)

s.read(template.request_size) # just wait for x bytes, don't check them

# GET /3384 HTTP/1.1

# Host: 22.0.0.3

# Connection: Keep-Alive

# User-Agent: Mozilla/4.0 ..

# Accept: */*

# Accept-Language: en-us

# Accept-Encoding: gzip, deflate, compress

s.write(template.response) # just send x bytes,

# the peer doesn't check them (TCP checks the seq and checksum)

#HTTP/1.1 200 OK

#Server: Microsoft-IIS/6.0

#Content-Type: text/html

#Content-Length: 32000

# body ..

s.close()

(1) As you can see from the pseudo code, there is no need to open all the servers ahead of time; we open and allocate a socket only when a packet matches the criteria of the server side

(2) The program is the opposite of the client side.

The above is just pseudo code that was created to explain how TRex logically works. It was simpler to show pseudo code that runs in one thread in a blocking fashion, but in practice it runs in an event-driven manner, and many flows can be multiplexed at high performance and scale. The L7 program can be written using the Python API (it is compiled to event-driven micro-code by the TRex server).

- Goal: Simple browsing with HTTP and HTTPS flows. In this example, each template has a different destination port (80/443).

- Traffic profile: The profile includes an HTTP template and an HTTPS template. Each second there would be 2 HTTPS flows and 1 HTTP flow.

- File

class Prof1():

def get_profile(self):

# ip generator

ip_gen_c = ASTFIPGenDist(ip_range=["16.0.0.0", "16.0.0.255"],

distribution="seq")

ip_gen_s = ASTFIPGenDist(ip_range=["48.0.0.0", "48.0.255.255"],

distribution="seq")

ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),

dist_client=ip_gen_c,

dist_server=ip_gen_s)

return ASTFProfile(default_ip_gen=ip_gen,

cap_list=[

ASTFCapInfo(file="../avl/delay_10_http_browsing_0.pcap",

cps=1), (1)

ASTFCapInfo(file="avl/delay_10_https_0.pcap",

cps=2) (2)

])

def register():

return Prof1()

(1) HTTP template

(2) HTTPS template

- Discussion: The server side chooses the template based on the destination port. Because each template has a unique destination port (80/443), there is nothing more to do. The next example shows what to do when both templates have the same destination port. On the client side, the scheduler schedules 2 HTTPS flows and 1 HTTP flow each second, based on the CPS.

Note: In the real file, cps=1 for both templates.
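The relative CPS values translate into absolute rates once a multiplier is applied. The sketch below assumes that the `-m` multiplier scales each template's CPS linearly; that assumption, and the helper name `expected_rates`, are illustrative and not taken from the TRex source.

```python
def expected_rates(template_cps, multiplier=1):
    """Return per-template connections/sec and share of new flows."""
    total = sum(template_cps.values())
    return {
        name: {"cps": cps * multiplier, "share": cps / total}
        for name, cps in template_cps.items()
    }


# CPS values from the profile above, with -m 1000 assumed on the command line.
rates = expected_rates({"http": 1, "https": 2}, multiplier=1000)
for name, r in rates.items():
    print(f"{name}: {r['cps']} cps, {r['share']:.0%} of new flows")
```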

- Goal: Create a profile with two HTTP templates. In this example, both pcap files have the same destination port (80).

- Traffic profile: The profile includes the same HTTP template twice, only for demonstration.

- File

class Prof1():

def get_profile(self):

# ip generator

ip_gen_c = ASTFIPGenDist(ip_range=["16.0.0.0", "16.0.0.255"],

distribution="seq")

ip_gen_s = ASTFIPGenDist(ip_range=["48.0.0.0", "48.0.255.255"],

distribution="seq")

ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),

dist_client=ip_gen_c,

dist_server=ip_gen_s)

return ASTFProfile(default_ip_gen=ip_gen,

cap_list=[ASTFCapInfo(file="../avl/delay_10_http_browsing_0.pcap",

cps=1), (1)

ASTFCapInfo(file="../avl/delay_10_http_browsing_0.pcap",

cps=2,port=8080) (2)

])

def register():

return Prof1()

(1) HTTP template

(2) HTTP template that overrides the destination port of the pcap file

- Discussion: In the real world, the same server can handle many types of transactions on the same port based on the request. This TRex version has this limitation, as it is only an emulation. Next, we would add a better engine that could associate the template based on the server IP-port socket or on L7 data.

- Goal

-

Create a profile with two sets of client/server tuple pools.

- Traffic profile

-

The profile includes the same HTTP template for demonstration.

- File

class Prof1():

def __init__(self):

pass # tunables

def create_profile(self):

ip_gen_c1 = ASTFIPGenDist(ip_range=["16.0.0.1", "16.0.0.255"],

distribution="seq") (1)

ip_gen_s1 = ASTFIPGenDist(ip_range=["48.0.0.1", "48.0.255.255"],

distribution="seq")

ip_gen1 = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),

dist_client=ip_gen_c1,

dist_server=ip_gen_s1)

ip_gen_c2 = ASTFIPGenDist(ip_range=["10.0.0.1", "10.0.0.255"],

distribution="seq") (2)

ip_gen_s2 = ASTFIPGenDist(ip_range=["20.0.0.1", "20.0.255.255"],

distribution="seq")

ip_gen2 = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),

dist_client=ip_gen_c2,

dist_server=ip_gen_s2)

profile = ASTFProfile(cap_list=[

ASTFCapInfo(file="../cap2/http_get.pcap",

ip_gen=ip_gen1), (3)

ASTFCapInfo(file="../cap2/http_get.pcap",

ip_gen=ip_gen2, port=8080) (4)

])

return profile

(1) Define generator range 1

(2) Define generator range 2

(3) Assign generator range 1 to the first template

(4) Assign generator range 2 to the second template

- Discussion: The tuple generator ranges should not overlap.
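A quick way to confirm that a set of pools does not overlap before loading a profile is to compare the ranges as integer intervals. This is a standalone sketch using the standard `ipaddress` module; the pool names and the helper functions are hypothetical and not part of the TRex API.

```python
import ipaddress
from itertools import combinations


def to_interval(ip_range):
    """Convert a (first, last) pair of IPv4 strings to integer bounds."""
    first, last = (int(ipaddress.IPv4Address(addr)) for addr in ip_range)
    if first > last:
        raise ValueError(f"range is reversed: {ip_range}")
    return first, last


def find_overlaps(pools):
    """Return pairs of pool names whose inclusive ranges intersect."""
    intervals = {name: to_interval(r) for name, r in pools.items()}
    return [
        (a, b)
        for a, b in combinations(intervals, 2)
        if intervals[a][0] <= intervals[b][1] and intervals[b][0] <= intervals[a][1]
    ]


POOLS = {
    "clients_1": ("16.0.0.1", "16.0.0.255"),
    "servers_1": ("48.0.0.1", "48.0.255.255"),
    "clients_2": ("10.0.0.1", "10.0.0.255"),
    "servers_2": ("20.0.0.1", "20.0.255.255"),
}
print(find_overlaps(POOLS))
```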

- Goal: Create a profile with a different offset per side for dual mask ports.

- Traffic profile: A modified version of the http_simple profile.

- File

class Prof1():

def __init__(self):

pass

def get_profile(self, tunables, **kwargs):

parser = argparse.ArgumentParser(description='Argparser for {}'.format(os.path.basename(__file__)),

formatter_class=argparse.ArgumentDefaultsHelpFormatter)

args = parser.parse_args(tunables)

# ip generator

ip_gen_c = ASTFIPGenDist(ip_range=["16.0.0.0", "16.0.0.255"], distribution="seq")

ip_gen_s = ASTFIPGenDist(ip_range=["48.0.0.0", "48.0.255.255"], distribution="seq")

ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0", ip_offset_server="5.0.0.0"), (1)

dist_client=ip_gen_c,

dist_server=ip_gen_s)

return ASTFProfile(default_ip_gen=ip_gen,

cap_list=[ASTFCapInfo(file="../avl/delay_10_http_browsing_0.pcap",

cps=2.776)])

(1) Defines an offset of "1.0.0.0" for the client side and an offset of "5.0.0.0" for the server side

- Discussion: Offset per side is available from version v2.97 and above.

ASTF can run a profile that includes a mix of IPv4 and IPv6 templates using the ipv6.enable tunable. The tunable can be global (for the whole profile) or per template. However, for backward compatibility, a CLI flag (in start) can convert the whole profile to IPv6 automatically.

trex>start -f astf/http_simple.py -m 1000 -d 1000 --astf -l 1000 --ipv6

[bash]>sudo ./t-rex-64 -f astf/http_simple.py -m 1000 -d 1000 -c 1 --astf -l 1000 --ipv6

::x.x.x.x where the LSB is the IPv4 address

The profile includes the same HTTP template for demonstration.

- File

Another way to enable IPv6 globally (or per template) is by tunables in the profile file

class Prof1():

def get_profile(self):

# ip generator

ip_gen_c = ASTFIPGenDist(ip_range=["16.0.0.0", "16.0.0.255"], distribution="seq")

ip_gen_s = ASTFIPGenDist(ip_range=["48.0.0.0", "48.0.255.255"], distribution="seq")

ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),

dist_client=ip_gen_c,

dist_server=ip_gen_s)

c_glob_info = ASTFGlobalInfo()

# Enable IPV6 for client side and set the default SRC/DST IPv6 MSB

# LSB will be taken from ip generator

c_glob_info.ipv6.src_msb ="ff02::" (1)

c_glob_info.ipv6.dst_msb ="ff03::" (2)

c_glob_info.ipv6.enable =1 (3)

return ASTFProfile(default_ip_gen=ip_gen,

# Defaults affects all files

default_c_glob_info=c_glob_info,

cap_list=[

ASTFCapInfo(file="../avl/delay_10_http_browsing_0.pcap",

cps=1)

]

)

(1) Set the default for the source IPv6 address (the 32-bit LSB is set by the IPv4 tuple generator)

(2) Set the default for the destination IPv6 address (the 32-bit LSB is set by the IPv4 tuple generator)

(3) Enable IPv6 for all templates
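The way the upper bits and the 32-bit LSB combine into one address can be shown with the standard `ipaddress` module. This is a sketch of the addressing rule described in the callouts, not of the TRex implementation; `ipv6_from_msb` is a hypothetical helper.

```python
import ipaddress


def ipv6_from_msb(msb: str, ipv4: str) -> str:
    """Combine an IPv6 MSB value (upper bits) with an IPv4 address (lower 32 bits)."""
    high = int(ipaddress.IPv6Address(msb))
    if high & 0xFFFFFFFF:
        raise ValueError("the low 32 bits of the MSB value must be zero")
    low = int(ipaddress.IPv4Address(ipv4))
    return str(ipaddress.IPv6Address(high | low))


# MSB values from the profile above, LSB from the tuple generator ranges.
print(ipv6_from_msb("ff02::", "16.0.0.1"))
print(ipv6_from_msb("ff03::", "48.0.0.1"))
```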

In this case there is no need for --ipv6 in the CLI.

trex>start -f astf/param_ipv6.py -m 1000 -d 1000 -l 1000

Profile tunables are a mechanism to tune the behavior of an ASTF traffic profile. The TCP layer has a set of tunables. IPv6 and IPv4 have another set of tunables.

There are two types of tunables:

- Global tunable: set per client/server side, it affects all the templates on that side.
- Per-template tunable: affects only the associated template (per client/server side). It has higher priority than a global tunable.

By default, the TRex server has a default value for every tunable, and the server overrides a value only when you set that specific tunable. An example of a tunable is tcp.mss. You can change tcp.mss:

- For all client side templates
- For all server side templates
- For a specific template on the client side
- For a specific template on the server side

class Prof1():

def get_profile(self):

# ip generator

ip_gen_c = ASTFIPGenDist(ip_range=["16.0.0.0", "16.0.0.255"], distribution="seq")

ip_gen_s = ASTFIPGenDist(ip_range=["48.0.0.0", "48.0.255.255"], distribution="seq")

ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),

dist_client=ip_gen_c,

dist_server=ip_gen_s)

c_glob_info = ASTFGlobalInfo()

c_glob_info.tcp.mss = 1400 (1)

c_glob_info.tcp.initwnd = 1

s_glob_info = ASTFGlobalInfo()

s_glob_info.tcp.mss = 1400 (2)

s_glob_info.tcp.initwnd = 1

return ASTFProfile(default_ip_gen=ip_gen,

# Defaults affects all files

default_c_glob_info=c_glob_info,

default_s_glob_info=s_glob_info,

cap_list=[

ASTFCapInfo(file="../avl/delay_10_http_browsing_0.pcap", cps=1)

]

)

(1) Set the client side global tcp.mss/tcp.initwnd to 1400/1

(2) Set the server side global tcp.mss/tcp.initwnd to 1400/1

class Prof1():

def get_profile(self):

# ip generator

ip_gen_c = ASTFIPGenDist(ip_range=["16.0.0.0", "16.0.0.255"], distribution="seq")

ip_gen_s = ASTFIPGenDist(ip_range=["48.0.0.0", "48.0.255.255"], distribution="seq")

ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),

dist_client=ip_gen_c,

dist_server=ip_gen_s)

c_info = ASTFGlobalInfo()

c_info.tcp.mss = 1200

c_info.tcp.initwnd = 1

s_info = ASTFGlobalInfo()

s_info.tcp.mss = 1400

s_info.tcp.initwnd = 10

return ASTFProfile(default_ip_gen=ip_gen,

# Defaults affects all files

cap_list=[

ASTFCapInfo(file="../avl/delay_10_http_browsing_0.pcap", cps=1),

ASTFCapInfo(file="../avl/delay_10_http_browsing_0.pcap", cps=1,

port=8080,

c_glob_info=c_info, s_glob_info=s_info), (1)

]

)

(1) Only the second template gets c_info/s_info
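The priority order described earlier (server default, then global tunable, then per-template tunable) can be modelled as a layered dictionary merge. This is a conceptual sketch only: the default values below are hypothetical placeholders, not the real TRex server defaults, and `effective_tunables` is not a TRex function.

```python
# Hypothetical server defaults, for illustration only.
SERVER_DEFAULTS = {"tcp.mss": 1460, "tcp.initwnd": 10}


def effective_tunables(defaults, global_info=None, template_info=None):
    """Later layers win: defaults < global tunables < per-template tunables."""
    merged = dict(defaults)
    merged.update(global_info or {})
    merged.update(template_info or {})
    return merged


# Client side of the second template in the example above.
second = effective_tunables(
    SERVER_DEFAULTS,
    global_info=None,
    template_info={"tcp.mss": 1200, "tcp.initwnd": 1},
)
# The first template has no per-template info, so it keeps the defaults.
first = effective_tunables(SERVER_DEFAULTS)
print(first, second)
```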

For reference of all tunables see: here

- Goal: Simple automation test using Python from a local or remote machine.

- File

For this mode to work, the TRex server must be started in interactive mode.

sudo ./t-rex-64 -i --astf

c = ASTFClient(server = server)

c.connect() (1)

c.reset()

if not profile_path:

profile_path = os.path.join(astf_path.ASTF_PROFILES_PATH, 'http_simple.py')

c.load_profile(profile_path)

c.clear_stats()

c.start(mult = mult, duration = duration, nc = True) (2)

c.wait_on_traffic() (3)

stats = c.get_stats() (4)

# use this for debug info on all the stats

#pprint(stats)

if c.get_warnings():

print('\n\n*** test had warnings ****\n\n')

for w in c.get_warnings():

print(w)

(1) Connect

(2) Start the traffic

(3) Wait for the test to finish

(4) Get all stats
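The same sequence of calls can be wrapped in one function so that the connection is always released, even when the run fails. The sketch below takes the client object as a parameter and uses only the methods shown in the snippet above, plus `disconnect()`, which is assumed to exist on the client. `RecordingClient` is a hypothetical stand-in so the sketch can run without a TRex server; in a real test you would pass an `ASTFClient` instead.

```python
def run_astf_test(client, profile_path, mult=1, duration=10):
    """Drive one ASTF run and return (stats, warnings)."""
    client.connect()
    try:
        client.reset()
        client.load_profile(profile_path)
        client.clear_stats()
        client.start(mult=mult, duration=duration, nc=True)
        client.wait_on_traffic()
        return client.get_stats(), list(client.get_warnings() or [])
    finally:
        # Assumed API: release the connection even if the run raised.
        client.disconnect()


class RecordingClient:
    """Stand-in client that only records which methods were called."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        def method(*args, **kwargs):
            self.calls.append(name)
            return {} if name == "get_stats" else None
        return method


fake = RecordingClient()
stats, warnings = run_astf_test(fake, "astf/http_simple.py", mult=1000, duration=20)
print(fake.calls)
```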

- Goal: Simple automation test using Python from a local or remote machine.

- Directories

Python API examples: automation/trex_control_plane/stf/examples.

Python API library: automation/trex_control_plane/stf/trex_stf_lib.

This mode works with the STF Python API framework and is deprecated.

- File

import argparse

import stf_path

from trex_stf_lib.trex_client import CTRexClient (1)

from pprint import pprint

def validate_tcp (tcp_s):

if 'err' in tcp_s :

pprint(tcp_s);

return(False);

return True;

def run_stateful_tcp_test(server):

trex_client = CTRexClient(server)

trex_client.start_trex(

c = 1, # (2)

m = 1000,

f = 'astf/http_simple.py', (3)

k=10,

d = 20,

l = 1000,

astf =True, #enable TCP (4)

nc=True

)

result = trex_client.sample_until_finish()

c = result.get_latest_dump()

pprint(c["tcp-v1"]["data"]); (5)

tcp_c= c["tcp-v1"]["data"]["client"];

if not validate_tcp(tcp_c):

return False

tcp_s= c["tcp-v1"]["data"]["server"];

if not validate_tcp(tcp_s):

return False

return True # all checks passed; without this the caller never prints PASS

if __name__ == '__main__':

parser = argparse.ArgumentParser(description="tcp example")

parser.add_argument('-s', '--server',

dest='server',

help='Remote trex address',

default='127.0.0.1',

type = str)

args = parser.parse_args()

if run_stateful_tcp_test(args.server):

print("PASS")

(1) Imports the old trex_stf_lib

(2) One DP core, could be more

(3) Load an ASTF profile

(4) Enable ASTF mode

(5) Check the ASTF client/server counters

See TRex Stateful Python API for details about using the Python APIs.

{'client': {'all': {

'm_active_flows': 6662,

'm_avg_size': 1834.3,

},

'err' : { 'some error counter name' : 'description of the counter'} (1)

},

'server': { 'all': {},

'err' : {}

}

}

(1) The err object won’t exist if there are no errors

{'client': {'all': {'__last': 0,

'm_active_flows': 6662,

'm_avg_size': 1834.3,

'm_est_flows': 6657,

'm_rx_bw_l7_r': 369098181.6,

'm_rx_pps_r': 12671.8,

'm_tx_bw_l7_r': 2804666.1,

'm_tx_pps_r': 12672.2,

'redirect_rx_ok': 120548,

'tcps_closed': 326458,

'tcps_connattempt': 333120,

'tcps_connects': 333115,

'tcps_delack': 1661439,

'tcps_preddat': 1661411,

'tcps_rcvackbyte': 83275862,

'tcps_rcvackpack': 664830,

'tcps_rcvbyte': 10890112648,

'tcps_rcvpack': 2326241,

'tcps_rttupdated': 997945,

'tcps_segstimed': 997962,

'tcps_sndacks': 2324887,

'tcps_sndbyte': 82945635,

'tcps_sndctrl': 333120,

'tcps_sndpack': 333115,

'tcps_sndtotal': 2991122}},

'server': {'all': {'__last': 0,

'm_active_flows': 6663,

'm_avg_size': 1834.3,

'm_est_flows': 6657,

'm_rx_bw_l7_r': 2804662.9,

'm_rx_pps_r': 14080.0,

'm_tx_bw_l7_r': 369100825.2,

'm_tx_pps_r': 11264.0,

'redirect_rx_ok': 120549,

'tcps_accepts': 333118,

'tcps_closed': 326455,

'tcps_connects': 333112,

'tcps_rcvackbyte': 10882823775,

'tcps_rcvackpack': 2657980,

'tcps_rcvbyte': 82944888,

'tcps_rcvpack': 664836,

'tcps_rttupdated': 2657980,

'tcps_segstimed': 2659379,

'tcps_sndacks': 664842,

'tcps_sndbyte': 10890202264,

'tcps_sndpack': 1994537,

'tcps_sndtotal': 2659379}}}

If there are no errors, the err object is absent. If an error counter is raised, the err section lists the counter together with its description. The all section holds the values of both the regular and the error counters.
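A minimal sketch of how a test could check these counters, assuming the dictionary has the shape shown above (a client and a server section, each with all and an optional err). The helper name `collect_errors` and the sample values are hypothetical and are not part of the TRex API.

```python
def collect_errors(stats):
    """Return {side: {counter: description}} for every side that reports errors."""
    errors = {}
    for side in ("client", "server"):
        # The 'err' key is absent (or empty) when no error counter was raised.
        side_err = stats.get(side, {}).get("err")
        if side_err:
            errors[side] = dict(side_err)
    return errors


# Sample input shaped like the output above (values are illustrative only).
sample = {
    "client": {"all": {"m_active_flows": 6662, "tcps_drops": 1},
               "err": {"tcps_drops": "connections dropped"}},
    "server": {"all": {"m_active_flows": 6663}},
}

found = collect_errors(sample)
print(found if found else "no errors")
```

A test would typically pass only when the returned dictionary is empty.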

- Goal

-

Use the TRex ASTF simple simulator.

The TRex package includes a simulator tool, astf-sim.

The simulator operates as a Python script that calls an executable.

The platform requirements for the simulator tool are the same as for TRex.

There is no need for super user in case of simulation.

The TRex simulator can:

Demonstrate the most basic use case. The simple simulator runs one client flow and one server flow, and uses only one template (the first one). Its objective is to verify the TCP layer and the application layer. It can simulate many abnormal cases, for example:

-

Drop of specific packets.

-

Change of packet information (e.g. wrong sequence numbers)

-

Man in the middle RST and redirect

-

Keepalive timers.

-

Set the round trip time

-

Convert the profile to JSON format

Not all the capabilities of the simulator tool are exposed, but you can use it to debug the emulation layer and explore the pcap output files.

Example traffic profile:

- File

The following runs the traffic profile through the TRex simulator and stores the output in pcap files.

[bash]>./astf-sim -f astf/http_simple.py -o b

The following pcap files are generated:

-

b_c.pcap client side pcap

-

b_s.pcap server side pcap

Contents of the output pcap file produced by the simulator in the previous step:

Adding --json displays the details of the JSON profile

[bash]>./astf-sim -f astf/http_simple.py --json

{

"templates": [ (1)

{

"client_template": {

"tcp_info": {

"index": 0

},

"port": 80, # dst port

"cps": 1, # rate in CPS

"program_index": 0, # index into program_list

"cluster": {},

"ip_gen": {

"global": {

"ip_offset": "1.0.0.0"

},

"dist_client": {

"index": 0 # index into ip_gen_dist_list

},

"dist_server": {

"index": 1 # index into ip_gen_dist_list

}

}

},

"server_template": {

"program_index": 1,

"tcp_info": {

"index": 0

},

"assoc": [

{

"port": 80 # Which dst port will be associated with this template

}

]

}

}

],

"tcp_info_list": [ (2)

{

"options": 0,

"port": 80,

"window": 32768

}

],

"program_list": [ (3)

{

"commands": [

{

"name": "tx",

"buf_index": 0 # index into "buf_list"

},

{

"name": "rx",

"min_bytes": 32089

}

]

},

{

"commands": [

{

"name": "rx",

"min_bytes": 244

},

{

"name": "tx",

"buf_index": 1 # index into "buf_list"

}

]

}

],

"ip_gen_dist_list": [ (4)

{

"ip_start": "16.0.0.1",

"ip_end": "16.0.0.255",

"distribution": "seq"

},

{

"ip_start": "48.0.0.1",

"ip_end": "48.0.255.255",

"distribution": "seq"

}

],

"buf_list": [ (5)

"R0VUIC8zMzg0IEhUVFAvMS4xDQpIb3",

"SFRUUC8xLjEgMjAwIE9LDQpTZXJ2ZX"

]

}

-

A list of templates with the properties of each template

-

A list of indirect distinct tcp/ip options

-

A list of indirect distinct emulation programs

-

A list of indirect distinct tuple generator

-

A list of indirect distinct L7 buffers, used by emulation program (indirect) ( e.g. "buf_index": 1)

|

Note

|

We might change the JSON format in the future as this is a first version |
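The indirection in this JSON layout can be illustrated with a short sketch. It assumes a profile shaped like the one above, with buf_list entries encoded as base64; the shortened profile and the helper `describe_program` are hypothetical and only follow the program_index and buf_index references.

```python
import base64
import json

# Hypothetical, shortened profile in the same layout as the JSON above.
profile_json = json.dumps({
    "templates": [{
        "client_template": {"program_index": 0, "port": 80, "cps": 1},
        "server_template": {"program_index": 1, "assoc": [{"port": 80}]},
    }],
    "program_list": [
        {"commands": [{"name": "tx", "buf_index": 0},
                      {"name": "rx", "min_bytes": 17}]},
        {"commands": [{"name": "rx", "min_bytes": 20},
                      {"name": "tx", "buf_index": 1}]},
    ],
    "buf_list": [
        base64.b64encode(b"GET /3384 HTTP/1.1\r\n").decode("ascii"),
        base64.b64encode(b"HTTP/1.1 200 OK\r\n").decode("ascii"),
    ],
})


def describe_program(profile, program_index):
    """Resolve the buf_index references of one program into readable steps."""
    steps = []
    for cmd in profile["program_list"][program_index]["commands"]:
        if cmd["name"] == "tx":
            # "tx" points into buf_list; decode the L7 buffer it refers to.
            data = base64.b64decode(profile["buf_list"][cmd["buf_index"]])
            steps.append(("tx", data))
        else:
            steps.append((cmd["name"], cmd.get("min_bytes")))
    return steps


profile = json.loads(profile_json)
template = profile["templates"][0]
client_steps = describe_program(profile, template["client_template"]["program_index"])
server_steps = describe_program(profile, template["server_template"]["program_index"])
print(client_steps)
print(server_steps)
```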

- Goal

-

Use the TRex ASTF advanced simulator.

It is like the simple simulator, but it simulates multiple templates and flows exactly as the TRex server would with one DP core.

[bash]>./astf-sim -f astf/http_simple.py --full -o b.pcap

-

Use ‘--full’ to initiate the full simulation mode.

-

The output file b.pcap is generated; it is the client-side multiplexed pcap.

-

There is no server-side pcap file in this simulation. Latency/jitter/drop are not simulated in this case, so the server side should be the same as the client side.

Another example, which runs the sfr profile in release mode and shows the counters:

[bash]>./astf-sim -f astf/sfr.py --full -o o.pcap -d 1 -r -v

- Goal

-

Build the L7 program using low level commands.

# we can send either Python bytes type as below:

http_req = b'GET /3384 HTTP/1.1\r\nHost: 22.0.0.3\r\nConnection: Keep-Alive\r\nUser-Agent: Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; SV1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)\r\nAccept: */*\r\nAccept-Language: en-us\r\nAccept-Encoding: gzip, deflate, compress\r\n\r\n'

# or we can send Python string containing ascii chars, as below:

http_response = 'HTTP/1.1 200 OK\r\nServer: Microsoft-IIS/6.0\r\nContent-Type: text/html\r\nContent-Length: 32000\r\n\r\n<html><pre>**********</pre></html>'

class Prof1():

def __init__(self):

pass # tunables

def create_profile(self):

# client commands

prog_c = ASTFProgram() (1)

prog_c.send(http_req) (2)

prog_c.recv(len(http_response)) (3)

prog_s = ASTFProgram()

prog_s.recv(len(http_req))

prog_s.send(http_response)

# ip generator

ip_gen_c = ASTFIPGenDist(ip_range=["16.0.0.0", "16.0.0.255"], distribution="seq")

ip_gen_s = ASTFIPGenDist(ip_range=["48.0.0.0", "48.0.255.255"], distribution="seq")

ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),

dist_client=ip_gen_c,

dist_server=ip_gen_s)

tcp_params = ASTFTCPInfo(window=32768)

# template

temp_c = ASTFTCPClientTemplate(program=prog_c, tcp_info=tcp_params, ip_gen=ip_gen)

temp_s = ASTFTCPServerTemplate(program=prog_s, tcp_info=tcp_params) # using default association

template = ASTFTemplate(client_template=temp_c, server_template=temp_s)

# profile

profile = ASTFProfile(default_ip_gen=ip_gen, templates=template)

return profile

def get_profile(self):

return self.create_profile()

-

Build the emulation program

-

First send http request

-

Wait for http response

In the future we will expose a capability that takes a pcap file and converts it to Python code, so that you can tune it yourself.

- Goal

-

Tune a profile using CLI arguments. For example, change the response size with a given argument.

Every traffic profile must define the following function:

def create_profile(self,**kwargs)

A profile can have any key-value pairs. Key-value pairs are called "cli-tunables" and can be used to customize the profile (**kwargs).

The profile defines which tunables can be input to customize output.
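A sketch of the idea, not of TRex's own parser: the hypothetical helper `parse_tunables` turns a `key=val,key=val` string into keyword arguments, and a stand-in `create_profile` reads them with defaults. The returned dictionary stands in for a real profile object.

```python
def parse_tunables(arg):
    """Turn 'key=val,key=val' into a dict; values are kept as strings."""
    tunables = {}
    for pair in filter(None, arg.split(",")):
        key, sep, value = pair.partition("=")
        if not sep or not key:
            raise ValueError("expected key=val, got %r" % pair)
        tunables[key.strip()] = value.strip()
    return tunables


class Prof1:
    def create_profile(self, **kwargs):
        # Every tunable has a default, so the profile loads with no arguments.
        size = int(kwargs.get("size", 1))
        return {"response_size": size}  # stand-in for a real profile object


prof = Prof1()
default_profile = prof.create_profile()
tuned_profile = prof.create_profile(**parse_tunables("size=10000"))
print(default_profile, tuned_profile)
```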

- Usage notes for defining parameters

-

-

All parameters require default values.

-

A profile must be loadable with no parameters specified.

-

Every tunable must be expressed as key-value pair with a default value.

-

-t key=val,key=val is the way to provide the key-value pairs to the profile.

-

def create_profile (self, **kwargs):

# the size of the response size

http_res_size = kwargs.get('size',1)

# use http_res_size

http_response = http_response_template.format('*'*http_res_size)

[bash]>sudo ./t-rex-64 -f astf/http_manual_cli_tunable.py -m 1000 -d 1000 -c 1 --astf -l 1000 -t size=1

[bash]>sudo ./t-rex-64 -f astf/http_manual_cli_tunable.py -m 1000 -d 1000 -c 1 --astf -l 1000 -t size=10000

[bash]>sudo ./t-rex-64 -f astf/http_manual_cli_tunable.py -m 1000 -d 1000 -c 1 --astf -l 1000 -t size=1000000

[bash]>./astf-sim -f astf/http_manual_cli_tunable.py --json

[bash]>./astf-sim -f astf/http_manual_cli_tunable.py -t size=1000 --json

[bash]>./astf-sim -f astf/http_manual_cli_tunable.py -t size=1000 -o a.cap --full

By default, when the L7 emulation program ends, the socket is closed implicitly.

This example forces the server side to wait for the peer (client) to close, and only then send FIN.

# client commands

prog_c = ASTFProgram()

prog_c.send(http_req)

prog_c.recv(len(http_response))

# implicit close

prog_s = ASTFProgram()

prog_s.recv(len(http_req))

prog_s.send(http_response)

prog_s.wait_for_peer_close(); # wait for the client to close the socket, then issue a close

The packet trace should look like this:

client server

-------------

FIN

ACK

FIN

ACK

-------------

See astf-program for more info.

By default, when the L7 emulation program is started the sending buffer waits inside the socket.

This is seen as SYN/SYN-ACK/GET-ACK in the trace (piggyback ack in the GET requests).

To force the client side to send ACK and only then send the data use the connect() command.

client server

-------------

SYN

SYN-ACK

ACK

GET

-------------

prog_c = ASTFProgram()

prog_c.connect(); ## connect

prog_c.reset(); ## send RST from client side

prog_s = ASTFProgram()

prog_s.wait_for_peer_close(); # wait for client to close the socket

This example waits for the connection to be established and then sends an RST packet to shut down both the peer and the current socket.

client server

-------------

SYN

SYN-ACK

ACK

RST

-------------

See astf-program for more info.

prog_c = ASTFProgram()

prog_c.recv(len(http_resp))

prog_s = ASTFProgram()

prog_s.connect()

prog_s.send(http_resp)

prog_s.wait_for_peer_close(); # wait for client to close the socket

In this example the server sends the data first, so there must be a connect on the server side; otherwise the program will not work.

When the server is the first to send data (e.g. Citrix, Telnet), the server needs to wait until it has accepted the connection.

prog_c = ASTFProgram()

prog_c.recv(len(http_response))

prog_c.send(http_req)

prog_s = ASTFProgram()

prog_s.accept() # server waits for the connection to be established

prog_s.send(http_response)

prog_s.recv(len(http_req))

prog_c = ASTFProgram()

prog_c.send(http_req)

prog_c.recv(len(http_response))

prog_s = ASTFProgram()

prog_s.recv(len(http_req))

prog_s.delay(500000); # delay 500msec (500,000usec)

prog_s.send(http_response)

This example delays the server response by 500 msec.

prog_c = ASTFProgram()

prog_c.send(http_req)

prog_c.recv(len(http_response))

prog_s = ASTFProgram()

prog_s.recv(len(http_req))

prog_s.delay_rand(100000,500000); # delay a random time between 100msec and 500msec

prog_s.send(http_response)

This example delays the server response by a random time between 100 and 500 msec.

See astf-program for more info.

This example delays the client side.

In this example the client sends a partial request (10 bytes), waits 100 msec, and then sends the rest of the request (there would be two segments for one request).

prog_c = ASTFProgram()

prog_c.send(http_req[:10])

prog_c.delay(100000); # delay 100msec

prog_c.send(http_req[10:])

prog_c.recv(len(http_response))

prog_s = ASTFProgram()

prog_s.recv(len(http_req))

prog_s.send(http_response)

# client commands

prog_c = ASTFProgram()

prog_c.delay(100000); # delay 100msec

prog_c.send(http_req)

prog_c.recv(len(http_response))

prog_s = ASTFProgram()

prog_s.recv(len(http_req))

prog_s.send(http_response)

In this example the client connects first, waits for 100 msec, and only then sends the full request (there would be one segment for one request).

See astf-program for more info.

A side effect of this delay is more active-flows.

Let’s say we would like to send only 50 flows, each with a very big size (4GB). Loading a 4GB buffer would be a challenge because TRex’s memory is limited. Instead, we can loop on the server side, sending a 1MB buffer 4096 times, and then terminate.

prog_c = ASTFProgram()

prog_c.send(http_req)

prog_c.recv(0xffffffff)

prog_s = ASTFProgram()

prog_s.recv(len(http_req))

prog_s.set_var("var2",4096); #(1)

prog_s.set_label("a:"); #(2)

prog_s.send(http_response_1mbyte)

prog_s.jmp_nz("var2","a:") #(3) dec var "var2". in case it is *not* zero jump a:

-

Set variable

-

Set label

-

Jump to label 4096 times

See astf-program for more info.
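The loop semantics described above (set a variable, mark a label, decrement and jump while the variable is not zero) can be checked with a tiny stand-alone interpreter. This is only a sketch of the counting logic: `run_program` and the tuple encoding of the commands are hypothetical and are not how TRex executes programs.

```python
def run_program(commands):
    """Execute a list of (name, *args) tuples and return the number of sends."""
    variables = {}
    labels = {cmd[1]: i for i, cmd in enumerate(commands) if cmd[0] == "set_label"}
    sends = 0
    pc = 0  # index of the command being executed
    while pc < len(commands):
        name, *args = commands[pc]
        if name == "set_var":
            variables[args[0]] = args[1]
        elif name == "send":
            sends += 1
        elif name == "jmp_nz":
            # Decrement the variable; jump back while it is not zero.
            variables[args[0]] -= 1
            if variables[args[0]] != 0:
                pc = labels[args[1]]
                continue
        pc += 1
    return sends


server_program = [
    ("set_var", "var2", 4096),
    ("set_label", "a:"),
    ("send", "1MB buffer"),
    ("jmp_nz", "var2", "a:"),
]
total_sends = run_program(server_program)
print(total_sends, "sends of 1 MB each")
```

With the variable set to 4096 the buffer is sent 4096 times, that is 4GB in 1MB pieces, while only one 1MB buffer has to be held in memory.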

Usually, with very long flows, there is a need to cap the number of active flows. This can be done with the limit directive.

cap_list=[ASTFCapInfo(file="../avl/delay_10_http_browsing_0.pcap",

cps=1,limit=50)]) #(1)

-

Use the limit field to control the total flows generated.

By default, the send() command waits for the ACK of the last byte. With a big BDP (where a large window is required), it is possible to work in non-blocking mode and in this way achieve a full pipeline.

Have a look at the astf/http_eflow2.py example.

def create_profile(self,size,loop,mss,win,pipe):

http_response = 'HTTP/1.1'

bsize = len(http_response)

r=self.calc_loops (bsize,loop)

# client commands

prog_c = ASTFProgram()

prog_c.send(http_req)

if r[1]==0:

prog_c.recv(r[0])

else:

prog_c.set_var("var1",r[1]);

prog_c.set_label("a:");

prog_c.recv(r[0],True)

prog_c.jmp_nz("var1","a:")

if r[2]:

prog_c.recv(bsize*r[2])

prog_s = ASTFProgram()

prog_s.recv(len(http_req))

if pipe:

prog_s.set_send_blocking (False) #(1)

prog_s.set_var("var2",loop-1);

prog_s.set_label("a:");

prog_s.send(http_response)

prog_s.jmp_nz("var2","a:")

prog_s.set_send_blocking (True) #(2)

prog_s.send(http_response)

-

Set all sends to non-blocking mode from now on

-

Return to blocking mode so that the last send is blocking

See astf-program for more info.
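The remark above about a big BDP can be made concrete with a little arithmetic. The sketch below uses the standard bandwidth-delay product formula; the 1 Gb/s link and the 10 msec round trip time are illustrative assumptions, and 32768 is the window used in the earlier profile example.

```python
def bdp_bytes(bandwidth_bps, rtt_seconds):
    """Bandwidth-delay product: bytes that must be in flight to fill the link."""
    return bandwidth_bps * rtt_seconds / 8.0


def max_throughput_bps(window_bytes, rtt_seconds):
    """Upper bound on throughput when one window is in flight per round trip."""
    return window_bytes * 8.0 / rtt_seconds


rtt = 0.010      # assumed round trip time: 10 msec
window = 32768   # window size from the earlier profile example

needed = bdp_bytes(1e9, rtt)            # assumed 1 Gb/s link
cap = max_throughput_bps(window, rtt)

print("window needed to fill the link: %.0f bytes" % needed)
print("throughput cap with a 32768 byte window: %.1f Mb/s" % (cap / 1e6))
```

Under these assumptions a 32KB window limits one flow to a small fraction of the link, which is why a larger window and pipelined sends matter for long, fast flows.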

Same as the previous example, only instead of using a loop count we are using time as the measurement. In the previous example we calculated the received bytes in advance and used the recv command with the right byte values. However, sending and receiving data according to time is tricky, and errors/drops might occur (see the stats from the running example below).

Have a look at the astf/http_eflow4.py example.

...

# client commands

prog_c = ASTFProgram()

prog_c.send(http_req)

prog_c.set_tick_var("var1") (1)

prog_c.set_label("a:")

prog_c.recv(len(http_response), clear = True)

prog_c.jmp_dp("var1", "a:", recv_time) (2)

prog_c.reset()

# server commands

prog_s = ASTFProgram()

prog_s.recv(len(http_req), clear = True)

prog_s.set_tick_var("var2") (3)

prog_s.set_label("b:")

prog_s.send(http_response)

prog_s.jmp_dp("var2", "b:", send_time) (4)

prog_s.reset()

...

-

Start the clock at client side.

-

In case time passed since "var1" is less than "recv_time", jump to a:

-

Start the clock at server side.

-

In case time passed since "var2" is less than "send_time", jump to b:

|

Note

|

The reset() command is required on both sides in order to ignore some ASTF errors. |
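A rough sketch of why a time-based loop makes the two sides disagree. It models only the rule described above (repeat while the elapsed time is below the limit) with a simulated clock; the function `run_timed_loop` and the fixed 2 msec cost per iteration are assumptions made for illustration, not measurements.

```python
def run_timed_loop(duration_ms, iteration_cost_ms):
    """Count iterations of a loop that repeats while elapsed time < duration."""
    elapsed = 0
    iterations = 0
    while True:
        iterations += 1               # loop body: one send() or one recv()
        elapsed += iteration_cost_ms  # simulated time spent in the body
        if elapsed < duration_ms:     # time-based jump: go back while time remains
            continue
        break
    return iterations


# send_time=2 and recv_time=5 seconds, with an assumed 2 msec per iteration.
server_iters = run_timed_loop(2000, 2)
client_iters = run_timed_loop(5000, 2)
print("server sends:", server_iters, "client expects:", client_iters)
```

In this model the server stops after fewer iterations than the client expects, so the client is left waiting for data that never arrives. This is consistent with the need for reset() on both sides.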

Let’s test the script using tunables:

[bash]> sudo ./t-rex-64 --astf -f astf/http_eflow4.py -t send_time=2,recv_time=5

TUI stats:

TCP | | |

- | | |

tcps_connattempt | 1 | 0 | connections initiated

tcps_accepts | 0 | 1 | connections accepted

tcps_connects | 1 | 1 | connections established

tcps_closed | 1 | 1 | conn. closed (includes drops)

tcps_segstimed | 2 | 998 | segs where we tried to get rtt

tcps_rttupdated | 2 | 998 | times we succeeded

tcps_sndtotal | 999 | 999 | total packets sent

tcps_sndpack | 1 | 997 | data packets sent

tcps_sndbyte | 249 | 1138574 | data bytes sent by application

tcps_sndbyte_ok | 249 | 1138574 | data bytes sent by tcp

tcps_sndctrl | 1 | 1 | control (SYN|FIN|RST) packets sent

tcps_sndacks | 997 | 1 | ack-only packets sent

tcps_rcvpack | 997 | 1 | packets received in sequence

tcps_rcvbyte | 1138574 | 249 | bytes received in sequence

tcps_rcvackpack | 1 | 998 | rcvd ack packets

tcps_rcvackbyte | 249 | 1138574 | tx bytes acked by rcvd acks

tcps_rcvackbyte_of | 0 | 1 | tx bytes acked by rcvd acks - overflow acked

tcps_preddat | 996 | 0 | times hdr predict ok for data pkts

tcps_drops | 1 | 1 | connections dropped

tcps_predack | 0 | 977 | times hdr predict ok for acks

- | | |

UDP | | |

- | | |

- | | |

Flow Table | | |

- | | |

We got a tcps_drops error on the client side, and connections dropped on the server side. These can be regarded as an artifact of synchronizing the commands by time.

Let’s see the opposite case using tunables:

[bash]> sudo ./t-rex-64 --astf -f astf/http_eflow4.py -t send_time=5,recv_time=2

TUI stats:

TCP | | |

- | | |

tcps_connattempt | 1 | 0 | connections initiated

tcps_accepts | 0 | 1 | connections accepted

tcps_connects | 1 | 1 | connections established

tcps_closed | 1 | 1 | conn. closed (includes drops)

tcps_segstimed | 2 | 1001 | segs where we tried to get rtt

tcps_rttupdated | 2 | 1000 | times we succeeded

tcps_sndtotal | 1002 | 1001 | total packets sent

tcps_sndpack | 1 | 1000 | data packets sent

tcps_sndbyte | 249 | 1142000 | data bytes sent by application

tcps_sndbyte_ok | 249 | 1142000 | data bytes sent by tcp

tcps_sndctrl | 2 | 0 | control (SYN|FIN|RST) packets sent

tcps_sndacks | 999 | 1 | ack-only packets sent

tcps_rcvpack | 999 | 1 | packets received in sequence

tcps_rcvbyte | 1140858 | 249 | bytes received in sequence

tcps_rcvackpack | 1 | 1000 | rcvd ack packets

tcps_rcvackbyte | 249 | 1140858 | tx bytes acked by rcvd acks

tcps_rcvackbyte_of | 0 | 1 | tx bytes acked by rcvd acks - overflow acked

tcps_preddat | 998 | 0 | times hdr predict ok for data pkts

tcps_drops | 1 | 1 | connections dropped

tcps_predack | 0 | 979 | times hdr predict ok for acks

- | | |

UDP | | |

- | | |

- | | |

Flow Table | | |

- | | |

err_cwf | 1 | 0 | client pkt without flow

err_no_syn | 0 | 1 | server first flow packet with no SYN

In addition to the drops we got err_cwf and err_no_syn errors. These can also be regarded as an artifact of synchronizing the commands by time.

|

Note

|

Relying on time for send/recv commands will almost always cause errors/drops. In most cases the desired behavior is a loop count with bytes, not time. |

In this example, the client sends 5 pipelined requests and waits for 5 responses. The first response could come while we are sending the first request, as the Rx side and Tx side work in parallel.

pipeline=5;

# client commands

prog_c = ASTFProgram()

prog_c.send(pipeline*http_req)

prog_c.recv(pipeline*len(http_response))

prog_s = ASTFProgram()

prog_s.recv(pipeline*len(http_req))

prog_s.send(pipeline*http_response)

See astf-program for more info.

This example shows a UDP program.

# client commands

prog_c = ASTFProgram(stream=False)

prog_c.send_msg(http_req) #(1)

prog_c.recv_msg(1) #(2)

prog_s = ASTFProgram(stream=False)

prog_s.recv_msg(1)

prog_s.send_msg(http_response)

-

Send UDP message

-

Wait for number of packets

In case of pcap file, it will be converted to send_msg/recv_msg/delay taken from the pcap file.

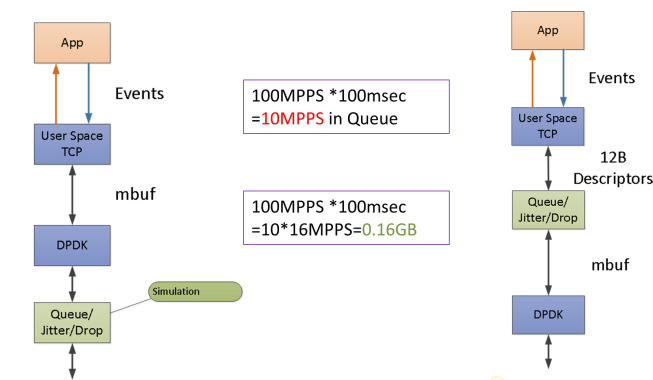

A template group is, as the name suggests, a group of templates that share statistics. Sharing statistics for templates has a number of use cases. For example, one would like to track the statistics of two different programs under the same profile. Another use case would be grouping different programs and comparing statistics between groups. Let us demonstrate a simple case, in which we run the same program with different cps in two different template groups.

def create_profile(self):

# client commands

prog_c = ASTFProgram(stream=False)

prog_c.send_msg(http_req)

prog_c.recv_msg(1)

prog_s = ASTFProgram(stream=False)

prog_s.recv_msg(1)

prog_s.send_msg(http_response)

# ip generator

ip_gen_c = ASTFIPGenDist(ip_range=["16.0.0.0", "16.0.0.255"], distribution="seq")

ip_gen_s = ASTFIPGenDist(ip_range=["48.0.0.0", "48.0.255.255"], distribution="seq")

ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),

dist_client=ip_gen_c,

dist_server=ip_gen_s)

# template

temp_c1 = ASTFTCPClientTemplate(port=80, program=prog_c,ip_gen=ip_gen, cps = 1)

temp_c2 = ASTFTCPClientTemplate(port=81, program=prog_c, ip_gen=ip_gen, cps = 2)

temp_s1 = ASTFTCPServerTemplate(program=prog_s, assoc=ASTFAssociationRule(80))

temp_s2 = ASTFTCPServerTemplate(program=prog_s, assoc=ASTFAssociationRule(81))

t1 = ASTFTemplate(client_template=temp_c1, server_template=temp_s1, tg_name = '1x') (1)

t2 = ASTFTemplate(client_template=temp_c2, server_template=temp_s2, tg_name = '2x')

# profile

profile = ASTFProfile(default_ip_gen=ip_gen, templates=[t1, t2])

return profile

-

The group name is 1x. In this simple profile both template groups contain a single template.

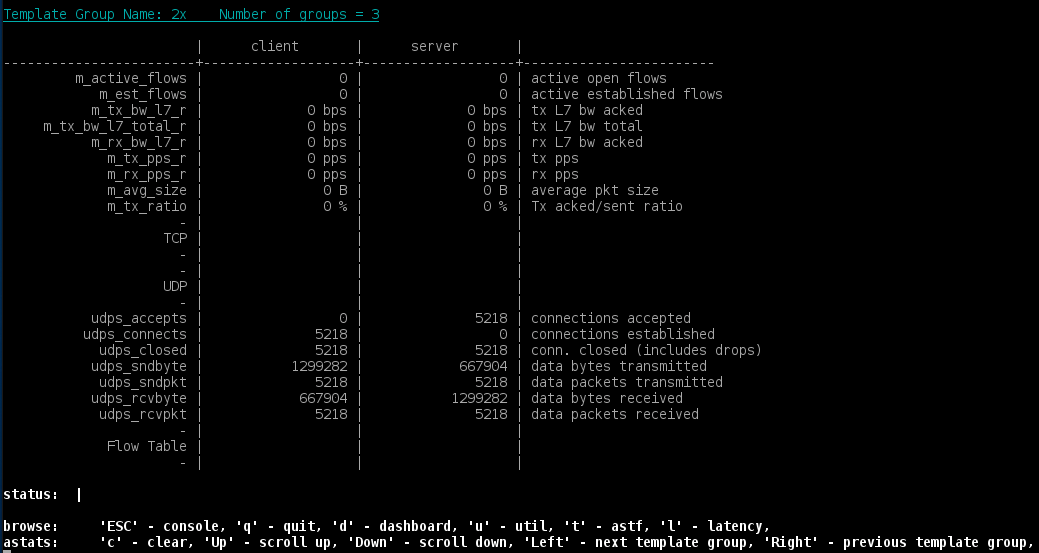

We can track the statistics using the TRex console. Within the console we can use the TUI (see previous examples) or the template group section API for live tracking of the counters. To move between template groups in the TUI, use the right and left arrow keys.

We can see that the ratio of udps_connects between the two groups converges to the ratio of cps, namely 2.

Next we explore how to use the template_group section directly from the console. For complete information, from the TRex console write:

[trex]>template_group --help

You will see that the section has two commands, names and stats.

The names command can receive two parameters, start (default value is 0) and amount (default value is 50).

It prints the list of names [start : start+amount]. You can use it this way:

[trex]>template_group names --start 3 --amount 20

Lastly, the stats command receives the name of a template group as a parameter and shows the statistics of this group. For example, one can write:

[trex]>template_group stats --name 1x

If you are performing automation, the client API might come in handy (see more in TRex ASTF API). Using the API we can get the names of all the active template groups. We can also get statistics for each template group or groups. The following example clarifies the use:

self.c.load_profile(profile)

self.c.clear_stats()

self.c.start(duration = 60, mult = 100)

self.c.wait_on_traffic()

names = self.c.get_tg_names() (1)

stats = self.c.get_traffic_tg_stats(names[:2]) (2)

-

Returns a list of the template group names on the profile.

-

Receives a list of template group names, and returns a dictionary of statistics per template group name.
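A sketch of how the returned statistics could be used to check the cps ratio between two groups. The layout of the dictionary (one entry per group name, each with client and server sections) is an assumption here, and `counter_ratio` is a hypothetical helper; verify the real layout against the API before relying on it.

```python
def counter_ratio(tg_stats, counter, side, numerator_tg, denominator_tg):
    """Ratio of one counter between two template groups, or None if undefined."""
    num = tg_stats[numerator_tg][side].get(counter, 0)
    den = tg_stats[denominator_tg][side].get(counter, 0)
    return num / den if den else None


# Illustrative values only; the dictionary layout is an assumption.
sample_stats = {
    "1x": {"client": {"udps_connects": 100}, "server": {"udps_accepts": 100}},
    "2x": {"client": {"udps_connects": 200}, "server": {"udps_accepts": 200}},
}

ratio = counter_ratio(sample_stats, "udps_connects", "client", "2x", "1x")
print("2x / 1x udps_connects ratio:", ratio)
```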

ASTF mode can do much more than what we saw in the previous examples. We can generate payloads offline and send them to the server. These payloads can also be updated, in a way that resembles the STL field engine.

|

Note

|

The actual file contains more templates than this example. |

def __init__(self):

self.cq_depth = 256

self.base_pkt_length = 42

self.payload_length = 14 + 8 + 16

self.packet_length = self.base_pkt_length + self.payload_length

self.cmpl_ofst = 14 + 8

self.cq_ofst = 14

self.cmpl_base = (1 << 14) | (1 << 15)

def create_first_payload(self, color):

cqe = "%04X%04X%08X%02X%02X%04X%04X%02X%02X" % (

0, # placeholder for completed index

0, # q_number_rss_type_flags

0, # RSS hash

self.packet_length, # bytes_written_flags

0,

0, # vlan

0, # cksum

((1 << 0) | (1 << 1) | (1 << 3) | (1 << 5)), # flags

7 | color

)

return ('z' * 14 + 'x' * 8 + base64.b16decode(cqe))

def update_payload(self, payload, cmpl_ofst, cmpl_idx, cq_ofst, cq_addr): #(1)

payload = payload[0:cmpl_ofst] + struct.pack('<H', cmpl_idx) + payload[cmpl_ofst+2:]

payload = payload[0:cq_ofst] + struct.pack('!Q', cq_addr) + payload[cq_ofst+8:]

return payload

-

Updates the payload based on the previous payload and on two variables (cmpl_idx, cq_addr).
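The listing above concatenates strings with the output of struct.pack, which works only where both are the same type. A minimal sketch of the same update on a bytes payload is shown below, assuming the layout from the listing (14 filler bytes, an 8-byte address at offset 14, a 16-bit index at offset 22). The 16 zero bytes used for the tail are a placeholder, not the real CQE contents.

```python
import struct


def update_payload(payload, cmpl_ofst, cmpl_idx, cq_ofst, cq_addr):
    """Return a copy of payload with the two fields overwritten."""
    buf = bytearray(payload)
    # 16-bit completion index, little-endian, at cmpl_ofst.
    struct.pack_into("<H", buf, cmpl_ofst, cmpl_idx)
    # 64-bit queue address, network byte order, at cq_ofst.
    struct.pack_into("!Q", buf, cq_ofst, cq_addr)
    return bytes(buf)


# 14 + 8 + 16 bytes; the last 16 bytes are a zero placeholder.
payload = b"z" * 14 + b"x" * 8 + bytes(16)
cmpl_base = (1 << 14) | (1 << 15)

updated = update_payload(payload, 14 + 8, cmpl_base, 14, 0x84241D000)
print(len(updated), updated[14:24].hex())
```

Writing into a bytearray keeps the payload length fixed, so a field can never shift the bytes that follow it.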

def create_template(self, sip, dip, cq_addr1, cq_addr2, color1, color2, pps):

prog_c = ASTFProgram(stream=False) #(1)

# Send the first 256 packets

cmpl_idx = self.cmpl_base

my_cq_addr = cq_addr1

payload = self.create_first_payload(color1)

for _ in range(self.cq_depth):

payload = self.update_payload(payload, self.cmpl_ofst, cmpl_idx,

self.cq_ofst, my_cq_addr) #(2)

prog_c.send_msg(payload) #(3)

prog_c.delay(1000000/pps) #(4)

cmpl_idx += 1

my_cq_addr += 16

# Send the second 256 packets

cmpl_idx = self.cmpl_base

my_cq_addr = cq_addr2

payload = self.create_first_payload(color2)

for _ in range(self.cq_depth):

payload = self.update_payload(payload, self.cmpl_ofst, cmpl_idx,

self.cq_ofst, my_cq_addr)

prog_c.send_msg(payload)

prog_c.delay(1000000/pps)

cmpl_idx += 1

my_cq_addr += 16

ip_gen_c = ASTFIPGenDist(ip_range=[sip, sip], distribution="seq") #(5)

ip_gen_s = ASTFIPGenDist(ip_range=[dip, dip], distribution="seq")

ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),

dist_client=ip_gen_c,

dist_server=ip_gen_s)

prog_s = ASTFProgram(stream=False)

prog_s.recv_msg(2*self.cq_depth) #(6)

temp_c = ASTFTCPClientTemplate(program=prog_c, ip_gen=ip_gen, limit=1) #(7)

temp_s = ASTFTCPServerTemplate(program=prog_s) # using default association

return ASTFTemplate(client_template=temp_c, server_template=temp_s)

-

stream = False means UDP

-

Update the payload as the Field Engine in STL would.

-

Send the message to the server.

-

pps = packets per second - therefore delay is 1 sec / pps

-

IP range can be configured. In this example the IP is fixed.

-

Server expects to receive twice 256 packets.

-

limit = 1 means that the template will generate only one flow.

In the end we create a profile with two templates (there could be many more).

def create_profile(self, pps):

# ip generator

source_ips = ["10.0.0.1", "33.33.33.37"]

dest_ips = ["10.0.0.3", "199.111.33.44"]

cq_addrs1 = [0x84241d000, 0x1111111111111111]

cq_addrs2 = [0x84241d000, 0x1818181818181818]

colors1 = [0x80, 0]

colors2 = [0x00, 0x80]

templates = []

for i in range(2):

templates.append(self.create_template(sip=source_ips[i], dip=dest_ips[i],

cq_addr1=cq_addrs1[i], cq_addr2=cq_addrs2[i],

color1=colors1[i], color2=colors2[i], pps=pps))

# profile

ip_gen_c = ASTFIPGenDist(ip_range=[source_ips[0], source_ips[0]], distribution="seq")

ip_gen_s = ASTFIPGenDist(ip_range=[dest_ips[0], dest_ips[0]], distribution="seq")

ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),

dist_client=ip_gen_c,

dist_server=ip_gen_s)

return ASTFProfile(default_ip_gen=ip_gen, templates=templates)

def get_profile(self, **kwargs):

pps = kwargs.get('pps', 1) #(1)

return self.create_profile(pps)

-

pps is a tunable like the ones shown in the previous tutorials. You can add it while using the CLI with -t pps=20. If it is not specified, pps=1.

In a test environment with firewalls, the number of ports can be limited. In this situation, a server port needs to be shared by multiple templates from multiple profiles.

In general, different templates should use different port numbers for their different actions.

ASTFAssociationRule() # for template 1, default port is 80

ASTFAssociationRule(port=81) # for template 2

This example shows how to share a server port between templates.

- File

By different IP range

With server port sharing, different templates can use the same port. First, they are differentiated by IP range; the ip_start and ip_end parameters can be used for this.

ASTFAssociationRule(ip_start="48.0.0.0", ip_end="48.0.0.255") # for template 1

ASTFAssociationRule(ip_start="48.0.1.0", ip_end="48.0.255.255") # for template 2

By different L7 contents

If multiple templates need to use the same port and IP for their different actions, the l7_map parameter can be used. It can specify the L7 contents to distinguish the template.

prog_c1.send(b"GET /3384 HTTP/1.1....") # for template 1 -- (1)

prog_c2.send(b"POST /3384 HTTP/1.1....") # for template 2 -- (1)

ASTFAssociationRule(port=80, ip_start="48.0.0.0", ip_end="48.0.0.255",

l7_map={"offset":[0,1,2,3], # -- (2)

"mask":[255,255,255,255] # -- (3)

} # -- (4)

)

-

The client program should send different L7 contents at the specified "offset". The value is "GET " for template 1 and "POST" for template 2.

-

Mandatory. Offsets must be increasing, and up to 8 of them are available.

-

Optional; the default is 255 for each offset value. The value at each offset can be masked: value = <value at offset> & mask

-

For short, l7_map=[0,1,2,3].

In addition, all the TCP tunables should be the same for server port sharing. The TCP protocol behavior needs to be the same until the first TCP payload is delivered and a template is identified.

For UDP, it is recommended that all the UDP payloads have the same contents at the specified "offset". Packet loss should be considered for UDP.
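The matching rule described above (value = <value at offset> & mask) can be sketched in a few lines. The functions `l7_key` and `pick_template` and the rule tuples are hypothetical; they only illustrate how the bytes at the given offsets distinguish "GET " from "POST", not how the TRex server implements the lookup.

```python
def l7_key(payload, offsets, masks=None):
    """Extract the bytes at the given offsets and apply the per-offset mask."""
    if masks is None:
        masks = [255] * len(offsets)  # default mask keeps every bit
    return [payload[o] & m for o, m in zip(offsets, masks)]


def pick_template(payload, rules):
    """Return the name of the first rule whose expected value matches."""
    for name, offsets, value in rules:
        if len(payload) > max(offsets) and l7_key(payload, offsets) == value:
            return name
    return None


rules = [
    ("template 1", [0, 1, 2, 3], list(b"GET ")),   # [71, 69, 84, 32]
    ("template 2", [0, 1, 2, 3], list(b"POST")),   # [80, 79, 83, 84]
]

print(pick_template(b"GET /3384 HTTP/1.1", rules))
print(pick_template(b"POST /3384 HTTP/1.1", rules))
```

Because the key is taken from the first payload bytes, the two templates must behave identically at the TCP level until that payload arrives.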

Server Mode

If you want to use it for the server mode, "value" should be used. The sizes of "offset" and "value" should be the same.

ASTFAssociationRule(l7_map={"offset":[0,1,2,3], "value":[71,69,84,32]}) # port=80, "GET "

ASTFAssociationRule(l7_map={"offset":[0,1,2,3], "value":[80,79,83,84]}) # port=80, "POST"

Mixed usage by IP range and L7 contents

You can use the IP range and L7 contents for the same port. First, the L7 contents matching is performed. If there is no match, the IP address is matched.

ASTFAssociationRule(port=80, ip_start="48.0.0.0", ip_end="48.0.0.255") # for template 1

ASTFAssociationRule(port=80, ip_start="48.0.0.0", ip_end="48.0.0.255",

l7_map=[0,1,2,3]) # for template 2

Until version v2.97, when the received packets are not destined for the TRex server, the TRex response depends on the value of the tcp.blackhole tunable.

The values of tcp.blackhole are:

-

tcp.blackhole = 0 - TRex returns RST packets always

-

tcp.blackhole = 1 - TRex returns RST packets only if the received packets are SYN packets.

-

tcp.blackhole = 2 - TRex doesn’t return RST packets.

see: Tunables

The response depends only on tcp.blackhole, without any consideration of the MAC and IPv4 addresses.

We would like the TRex response in case of error to also depend on the MAC and IPv4 addresses.

For that reason, from version v2.97, we can choose a list of MAC and IPv4 addresses that the TRex server ignores.

Namely, when the received packets are not destined for the TRex server, whether TRex responds with RST packets depends on two additional values:

-

Source MAC address - don’t generate RST packets in case the MAC address is in the black list.

-

Source IPv4 address - don’t generate RST packets in case the IPv4 address is in the black list.
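The combined rule can be summarized as a small decision function. This is a sketch of the behavior as described in this section, not TRex code: `should_send_rst` is a hypothetical name, and the order of the checks (black list first, then tcp.blackhole) is an assumption.

```python
def should_send_rst(blackhole, is_syn, src_mac, src_ip,
                    ignored_macs=(), ignored_ips=()):
    """Decide whether an RST is returned for a packet not destined for the server."""
    # Black list: never answer sources that were marked as ignored.
    if src_mac in ignored_macs or src_ip in ignored_ips:
        return False
    if blackhole == 0:
        return True       # always return RST
    if blackhole == 1:
        return is_syn     # return RST only for SYN packets
    return False          # blackhole == 2: never return RST


macs = {"24:8a:07:a2:f3:80", "25:9a:08:a1:f3:40"}
ips = {"16.0.0.0", "17.0.0.0", "17.0.0.1"}

print(should_send_rst(0, False, "aa:bb:cc:dd:ee:ff", "10.0.0.1", macs, ips))
print(should_send_rst(0, True, "24:8a:07:a2:f3:80", "10.0.0.1", macs, ips))
```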

Now we will see two identical examples of how to use the black list, using TRex console and ASTF automation API.

Setting ignored MAC and IPv4 addresses locally on python client

trex> black_list set --macs 24:8a:07:a2:f3:80 25:9a:08:a1:f3:40 --ipv4 16.0.0.0 17.0.0.0 17.0.0.1

-

To upload the addresses to the TRex server, use the --upload flag

Shows the local ignored MAC and IPv4 addresses

trex> black_list show

Macs black list (Not sync with server)

Mac_start | Mac_end | Is-Sync

------------------+-------------------+--------

24:8a:07:a2:f3:80 | 24:8a:07:a2:f3:80 | False

25:9a:08:a1:f3:40 | 25:9a:08:a1:f3:40 | False

IPs black list (Not sync with server)

IP_start | IP_end | Is-Sync

---------+----------+--------

16.0.0.0 | 16.0.0.0 | False

17.0.0.0 | 17.0.0.1 | False

-

The Is-Sync values are False since we haven’t pushed our list to the TRex server yet.

Upload the local addresses to the TRex server

trex> black_list upload

The local ignored MAC and IPv4 addresses after the upload

trex> black_list show

Macs black list (Sync with server)

Mac_start | Mac_end | Is-Sync

------------------+-------------------+--------

24:8a:07:a2:f3:80 | 24:8a:07:a2:f3:80 | True

25:9a:08:a1:f3:40 | 25:9a:08:a1:f3:40 | True

IPs black list (Sync with server)

IP_start | IP_end | Is-Sync

---------+----------+--------

16.0.0.0 | 16.0.0.0 | True

17.0.0.0 | 17.0.0.1 | True

-

Now the Is-Sync values are True.

Clear both the local black_list and the server black_list by using the --upload flag

trex> black_list clear --upload

For more commands

trex> black_list --help

usage: black_list [-h] ...

Black_list of MAC and IPv4 addresses related commands

optional arguments:

-h, --help show this help message and exit

commands:

set Set black list of MAC and IPv4 addresses

get Override the local list of black list with the server list

remove Remove MAC and IPv4 addresses from the black list

upload Upload to the server the current black list

clear Clear the current black list

show Show the current black list

client = ASTFClient("127.0.0.1")

client.connect()

ip_list = ["16.0.0.0", "17.0.0.0", "17.0.0.1"]

mac_list = ["24:8a:07:a2:f3:80", "25:9a:08:a1:f3:40"]

# setting the ignored MAC and IPv4 address

client.set_ignored_macs_ips(ip_list=ip_list, mac_list=mac_list, is_str=True)

black_list_dict = client.get_ignored_macs_ips(to_str=True)

....

# clearing the black list

client.set_ignored_macs_ips(ip_list=[], mac_list=[])

Please make yourself familiar with the GTPU protocol before proceeding.

- Goal

-

Activating And Deactivating GTPU mode.

The way GTPU mode is activated changed in version v2.93. This tutorial shows how to activate GTPU mode both before and after that change, and how to deactivate it in versions v2.93 and above.

Before v2.93, GTPU mode is activated using the --gtpu flag:

sudo ./t-rex-64 -i --astf --software --tso-disable --gtpu 0

From version v2.93, GTPU mode is activated and deactivated via an RPC command.

The following shows how to activate and deactivate GTPU mode using the TRex console and the ASTF automation API.

sudo ./t-rex-64 -i --astf --software --tso-disable

-

Activating GTPU mode:

trex> tunnel --type gtpu

-

Deactivating GTPU mode:

trex> tunnel --type gtpu --off

client = ASTFClient("127.0.0.1")

client.connect()

# gtpu type is 1

client.activate_tunnel(tunnel_type=1, activate=True)  # (1)

...

...

client.activate_tunnel(tunnel_type=1, activate=False)  # (2)

-

Activating GTPU mode

-

Deactivating GTPU mode

See: tunnel_type

- Goal

-

Activating/Deactivating GTPU Loopback mode using TRex console and ASTF automation API

sudo ./t-rex-64 -i --astf --software --tso-disable

-

Activating GTPU Loopback mode:

trex> tunnel --type gtpu --loopback

-

Deactivating GTPU Loopback mode:

trex> tunnel --type gtpu --off

client = ASTFClient("127.0.0.1")

client.connect()

# gtpu type is 1

client.activate_tunnel(tunnel_type=1, activate=True, loopback=True)  # (1)

...

...

client.activate_tunnel(tunnel_type=1, activate=False)  # (2)

-

Activating GTPU mode with loopback mode

-

Deactivating GTPU mode

See: tunnel_type
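Because activation and deactivation always come in pairs, it can be convenient to wrap them so that deactivation happens even if the code in between fails. The following is a minimal sketch under stated assumptions: `gtpu_mode` and `RecordingClient` are hypothetical helpers, and only the `activate_tunnel` keyword arguments shown in the snippets above are used. With a real connected `ASTFClient` (v2.93 and above), the stand-in client would be replaced by that object.

```python
from contextlib import contextmanager

GTPU_TUNNEL_TYPE = 1  # "gtpu type is 1", as in the snippets above


@contextmanager
def gtpu_mode(client, loopback=False):
    """Activate GTPU mode on entry and always deactivate it on exit."""
    if loopback:
        client.activate_tunnel(tunnel_type=GTPU_TUNNEL_TYPE, activate=True, loopback=True)
    else:
        client.activate_tunnel(tunnel_type=GTPU_TUNNEL_TYPE, activate=True)
    try:
        yield client
    finally:
        # Runs even if the body of the with-block raises.
        client.activate_tunnel(tunnel_type=GTPU_TUNNEL_TYPE, activate=False)


class RecordingClient:
    """Hypothetical stand-in for ASTFClient that only records calls."""

    def __init__(self):
        self.calls = []

    def activate_tunnel(self, **kwargs):
        self.calls.append(kwargs)


fake = RecordingClient()
with gtpu_mode(fake, loopback=True):
    pass  # start traffic, update tunnel records, and so on
print(fake.calls)
```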

Tutorial: Generating GTPU traffic

- Goal

-

Generating GTPU traffic with IPv4/IPv6 header using TRex console and ASTF automation API

- Traffic profile

-

The following profile defines one template of HTTP

- File

from trex.astf.api import *
import argparse
import os


class Prof1():
    def __init__(self):
        pass

    def get_profile(self, tunables, **kwargs):
        parser = argparse.ArgumentParser(description='Argparser for {}'.format(os.path.basename(__file__)),
                                         formatter_class=argparse.ArgumentDefaultsHelpFormatter)
        args = parser.parse_args(tunables)

        # ip generator
        ip_gen_c = ASTFIPGenDist(ip_range=["16.0.0.0", "16.0.0.255"], distribution="seq")
        ip_gen_s = ASTFIPGenDist(ip_range=["48.0.0.0", "48.0.255.255"], distribution="seq")
        ip_gen = ASTFIPGen(glob=ASTFIPGenGlobal(ip_offset="1.0.0.0"),
                           dist_client=ip_gen_c,
                           dist_server=ip_gen_s)

        return ASTFProfile(default_ip_gen=ip_gen,
                           cap_list=[ASTFCapInfo(file="../avl/delay_10_http_browsing_0.pcap",
                                                 cps=2.776)])


def register():
    return Prof1()

In order to generate GTPU traffic, each client must be assigned a GTPU context.

There are two ways to achieve this:

-

Using the update_tunnel_client_record API.

-

Using tunnel topology (from version v2.97).

We will start with the update_tunnel_client_record API examples, and then move to tunnel_topology, which is newer and more flexible.

update_tunnel_client_record API:

As mentioned earlier, generating GTPU traffic requires assigning a GTPU context to each client, and the update_tunnel_client_record API is one way to do so.

The first step is to enable GTPU mode and start traffic with some profile.

Once traffic is running, update the GTPU context of each client.

Each client tunnel record consists of:

-

Source IP of the outer IP header

-

Destination IP of the outer IP header

-

Source port of outer UDP header - from version v2.93

-

TEID of GTPU header - field used to multiplex different connections in the same GTP tunnel.

-

IP version of the outer IP header

Every valid IP address can be used for the tunnel source and destination IP.
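The record fields listed above can be pictured as a small data structure. The sketch below is illustrative only: `TunnelRecord` is a hypothetical class, not the TRex `Tunnel` type, and the checks are assumptions about what a sensible record looks like (both outer addresses share the stated IP version, the UDP source port fits in 16 bits, and the GTP-U TEID fits in 32 bits). The IPv6 addresses come from the 2001:db8::/32 documentation prefix.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class TunnelRecord:
    """Hypothetical mirror of the per-client tunnel record fields."""
    src_ip: str      # source IP of the outer IP header
    dst_ip: str      # destination IP of the outer IP header
    sport: int       # source port of the outer UDP header
    teid: int        # TEID of the GTPU header
    ip_version: int  # IP version of the outer IP header (4 or 6)

    def __post_init__(self):
        # ipaddress.ip_address raises ValueError for malformed addresses.
        src = ipaddress.ip_address(self.src_ip)
        dst = ipaddress.ip_address(self.dst_ip)
        if not (src.version == dst.version == self.ip_version):
            raise ValueError("src_ip, dst_ip and ip_version must agree")
        if not 0 <= self.sport <= 0xFFFF:
            raise ValueError("sport must fit in 16 bits")
        if not 0 <= self.teid <= 0xFFFFFFFF:
            raise ValueError("teid must fit in 32 bits")


v4 = TunnelRecord("1.1.1.1", "11.0.0.1", 5000, 0, 4)
v6 = TunnelRecord("2001:db8::1", "2001:db8::2", 5000, 1, 6)
print(v4)
print(v6)
```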

The following two equivalent examples show how to use the update_tunnel_client_record API with an IPv4 outer header and with an IPv6 outer header.

The first example uses the TRex console and the second uses the ASTF automation API.

In these examples:

-

We will show how to update the tunnel context of the clients in the range 16.0.0.0 - 16.0.0.255:

-

The teid is incremented by 1 for each client in the range: the first client receives teid 0, the second teid 1, and so on.

-

The source port and the outer IP addresses are the same for all of the clients in the range.

-

The IPv6 outer header is set by using src and dst addresses in IPv6 format, together with the --ipv6 flag in the TRex console or ip_version set to 6 in the ASTF Python automation.

Updating the clients GTPU tunnel with IPv4 outer header:

trex> update_tunnel --c_start 16.0.0.0 --c_end 16.0.0.255 --teid 0 --src_ip 1.1.1.1 --dst_ip 11.0.0.1 --sport 5000 --type gtpu

For IPv6 outer header:

-

Change the source IP and the destination IP to IPv6 addresses

-

Add the flag --ipv6
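To make the difference between the two console variants concrete, the sketch below formats the console line for either IP version. It is a hedged illustration: `update_tunnel_command` is a hypothetical helper, the flags are the ones named in this tutorial, the position of --ipv6 at the end of the line is an assumption, and the IPv6 addresses are taken from the 2001:db8::/32 documentation prefix.

```python
import ipaddress


def update_tunnel_command(c_start, c_end, teid, src_ip, dst_ip, sport):
    """Format an update_tunnel console line; adds --ipv6 for IPv6 outer addresses."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    if src.version != dst.version:
        raise ValueError("outer source and destination must use the same IP version")
    parts = [
        "update_tunnel",
        "--c_start", c_start, "--c_end", c_end,
        "--teid", str(teid),
        "--src_ip", src_ip, "--dst_ip", dst_ip,
        "--sport", str(sport),
        "--type", "gtpu",
    ]
    if src.version == 6:
        parts.append("--ipv6")  # assumed placement of the flag
    return " ".join(parts)


cmd_v4 = update_tunnel_command("16.0.0.0", "16.0.0.255", 0, "1.1.1.1", "11.0.0.1", 5000)
cmd_v6 = update_tunnel_command("16.0.0.0", "16.0.0.255", 0, "2001:db8::1", "2001:db8::2", 5000)
print(cmd_v4)
print(cmd_v6)
```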

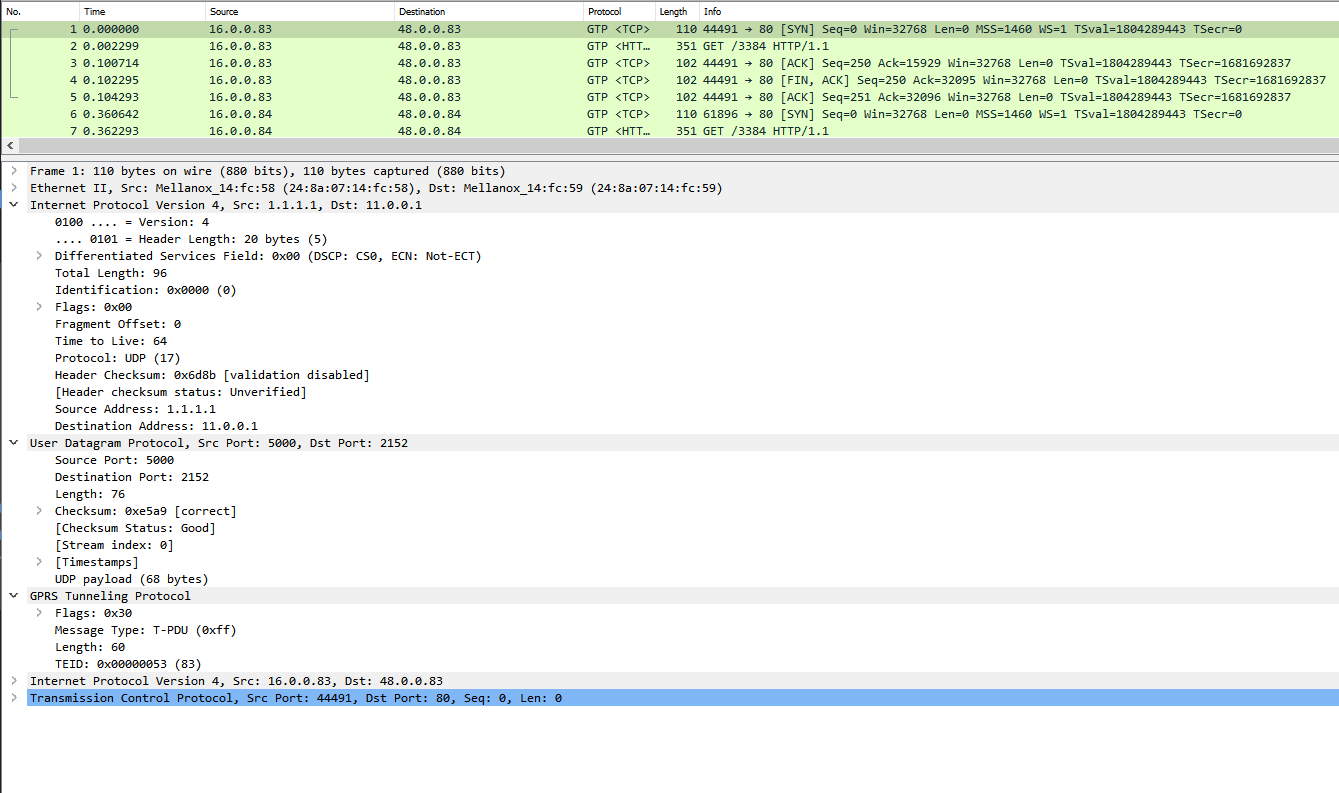

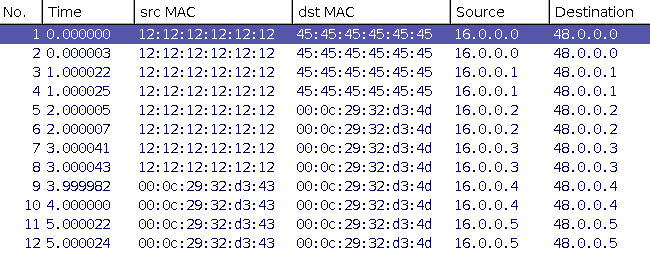

Capture of the traffic after the update command with IPv4:

Here we can see that the GTPU header was indeed added.

(Wireshark shows us the inner IP addresses)

And here is an example of the outer packet from Wireshark.

We can see that the outer IP addresses and the outer source port are the ones we set using the update_tunnel_client_record API

-

In these two images we can see that the teid is incrementing between the clients.

Updating the clients GTPU tunnel with IPv4 outer header:

ip_prefix = "16.0.0."
sip = "1.1.1.1"    # (1)
dip = "11.0.0.1"   # (2)
sport = 5000
teid = 0
client_db = dict()
ip_version = 4     # (3)

# gtpu type is 1
tunnel_type = 1
num_of_client = 256

while teid < num_of_client:
    c_ip = ip_prefix + str(teid)
    c_ip = ip_to_uint32(c_ip)
    client_db[c_ip] = Tunnel(sip, dip, sport, teid, ip_version)
    teid += 1

astf_client.update_tunnel_client_record(client_db, tunnel_type)

As you can see, we loop over the clients 16.0.0.0 - 16.0.0.255 and build for each client the same record we used in the console example.

For IPv6 outer header:

-

Change the source IP to IPv6 address

-

Change the destination IP to IPv6 address

-

Change the version to 6
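The three changes listed above can be folded into one helper that derives the IP version from the outer addresses. The sketch below runs without TRex installed and makes several assumptions that are not confirmed by this document: `build_client_db` and `TunnelStub` are hypothetical names, `TunnelStub` only mimics the argument order of the `Tunnel(sip, dip, sport, teid, ip_version)` call shown earlier, and the integer key is assumed to match what `ip_to_uint32` returns (the address as a big-endian 32-bit number).

```python
import ipaddress
from collections import namedtuple

# Hypothetical stand-in for the Tunnel record used in the API example.
TunnelStub = namedtuple("TunnelStub", "sip dip sport teid ip_version")


def build_client_db(c_start, c_end, sip, dip, sport, first_teid=0, make_record=TunnelStub):
    """Map each client IP (as an unsigned 32-bit int) to a tunnel record."""
    version = ipaddress.ip_address(sip).version
    if ipaddress.ip_address(dip).version != version:
        raise ValueError("outer source and destination must use the same IP version")
    start = int(ipaddress.IPv4Address(c_start))
    end = int(ipaddress.IPv4Address(c_end))
    return {
        c_ip: make_record(sip, dip, sport, first_teid + (c_ip - start), version)
        for c_ip in range(start, end + 1)
    }


# IPv6 outer header: only the outer addresses change, the version follows from them.
db6 = build_client_db("16.0.0.0", "16.0.0.255", "2001:db8::1", "2001:db8::2", 5000)
sample = db6[int(ipaddress.IPv4Address("16.0.0.5"))]
print(len(db6), sample)
```

With TRex available, passing the real `Tunnel` class as `make_record` and handing the result to `update_tunnel_client_record` would presumably reproduce the loop above, but that has not been verified here.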

Next is the second way to assign a GTPU context to each client.

Tunnels Topology:

Until version v2.97, assigning the tunnel context of each client required starting traffic with some profile and then using the update_tunnel API to update the tunnel context of each client.

From version v2.97, Python profiles of tunnels topology can set the clients' tunnel context before the traffic starts.

This topology consists of an array of tunnel contexts.

Each element in the array holds the tunnel context of a group of clients and consists of:

-

src_start - The first client IP address in the group

-

src_end - The last client IP address in the group

-

initial_teid - The teid of the first client in the group

-

teid_jump - The difference between the teids of two consecutive client IPs in the group

-

sport - The tunnel source port

-

version - The tunnel IP header version

-

type - The tunnel type (for example, 1 for gtpu). See: tunnel_type

-

src_ip - The tunnel source IP

-

dst_ip - The tunnel destination IP

-

activate - Boolean whether to activate/deactivate the client by default.
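The relationship between src_start, src_end, initial_teid and teid_jump can be sketched in a few lines. This is an illustration of the arithmetic implied by the field descriptions above, not the TRex implementation; `expand_tunnel_ctx` is a hypothetical helper.

```python
import ipaddress


def expand_tunnel_ctx(src_start, src_end, initial_teid, teid_jump):
    """Yield (client_ip, teid) pairs for one tunnel context."""
    start = int(ipaddress.IPv4Address(src_start))
    end = int(ipaddress.IPv4Address(src_end))
    for index, ip in enumerate(range(start, end + 1)):
        # The n-th client in the group gets initial_teid + n * teid_jump.
        yield str(ipaddress.IPv4Address(ip)), initial_teid + index * teid_jump


pairs = list(expand_tunnel_ctx("16.0.0.0", "16.0.0.255", initial_teid=0, teid_jump=1))
sparse = list(expand_tunnel_ctx("16.0.0.0", "16.0.0.3", initial_teid=100, teid_jump=10))
print(pairs[:2], pairs[-1])
print(sparse)
```

With initial_teid 0 and teid_jump 1, the group 16.0.0.0 - 16.0.0.255 gets the same teids as the update_tunnel_client_record examples earlier in this tutorial.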

from trex.astf.api import *


def get_topo():
    topo = TunnelsTopo()

    topo.add_tunnel_ctx(
        src_start = '16.0.0.0',
        src_end = '16.0.0.255',
        initial_teid = 0,
        teid_jump = 1,
        sport = 5000,
        version = 4,
        type = 1,
        src_ip = '1.1.1.11',
        dst_ip = '12.2.2.2',
        activate = True
    )