Project Continuous Control: In this project, we train an agent (a double-jointed arm) to follow a moving target. The environment is the Reacher environment provided by Unity Machine Learning Agents (ML-Agents).

NOTE:

- This project was completed in the Udacity Workspace, but it can also be completed on a local machine. Instructions on how to download and set up the Unity ML environments can be found in the Unity ML-Agents GitHub repo.

The state space has 33 dimensions, each of which is a continuous variable. It includes the position, rotation, velocity, and angular velocity of the agent.

The action space consists of action vectors, each with 4 dimensions, corresponding to the torque applied to the two joints. Every entry in the action vector should be a number in the interval [-1, 1].

A reward of +0.1 is provided for each step that the agent's hand is in the goal location. The goal of the agent is therefore to stay in contact with the target location for as many time steps as possible.
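As a rough illustration of the shapes described above, the following sketch samples random actions and clips them to the valid range. It uses NumPy only and does not talk to the Unity environment; the names `NUM_AGENTS`, `STATE_SIZE`, `ACTION_SIZE`, and `random_actions` are hypothetical and not taken from this repository.

```python
import numpy as np

NUM_AGENTS = 20    # use 1 for the single-agent version
STATE_SIZE = 33    # observation dimensions per agent
ACTION_SIZE = 4    # torque values per agent

# Fixed seed so the sketch is reproducible (illustrative choice)
rng = np.random.RandomState(0)


def random_actions(num_agents=NUM_AGENTS, action_size=ACTION_SIZE):
    """Sample one random action vector per agent, clipped to [-1, 1]."""
    actions = rng.randn(num_agents, action_size)
    return np.clip(actions, -1.0, 1.0)


actions = random_actions()
print(actions.shape)  # (20, 4)
```

A trained actor network would replace the random sampling, but the clipping step stays useful whenever noise is added to the network's output.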

For this project, two versions of the environment are provided:

- The first version contains a single agent.

- The second version contains 20 identical agents, each with its own copy of the environment. This version is particularly useful for algorithms like PPO, A3C, and D4PG that use multiple (non-interacting, parallel) copies of the same agent to distribute the task of gathering experience.

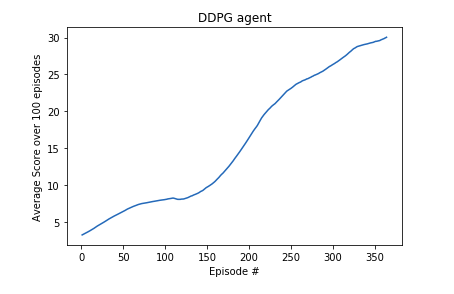

- For the first version: The task is episodic, and in order to solve the environment, the agent must get an average score of +30 over 100 consecutive episodes.

- For the second version: Since there is more than one agent, we must achieve an average score of +30 (over 100 consecutive episodes, and over all agents).
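The solve criterion above can be checked with a sliding window over per-episode scores. The sketch below assumes that each episode yields one score per agent and that the episode score is the mean over agents; the function name `first_solved_episode` and the synthetic scores are hypothetical, for illustration only.

```python
from collections import deque

import numpy as np

TARGET_SCORE = 30.0  # required average score
WINDOW = 100         # number of consecutive episodes to average over


def first_solved_episode(per_agent_scores):
    """Return the 1-based episode at which the windowed average first
    reaches TARGET_SCORE, or None if it never does.

    per_agent_scores: iterable of arrays, one array of agent scores per episode.
    """
    window = deque(maxlen=WINDOW)
    for episode, agent_scores in enumerate(per_agent_scores, start=1):
        # Average over all agents to get a single score for this episode
        window.append(float(np.mean(agent_scores)))
        # Only test the criterion once a full window of episodes is available
        if len(window) == WINDOW and np.mean(window) >= TARGET_SCORE:
            return episode
    return None


# Synthetic example: 50 weak episodes followed by 150 strong ones
scores = [np.full(20, 10.0)] * 50 + [np.full(20, 40.0)] * 150
print(first_solved_episode(scores))
```

For the single-agent version, the same function works with arrays of length 1.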

- Download the environment from one of the links below. You only need to select the environment that matches your operating system:

- Version 1: One (1) Agent

- Linux: click here

- Mac OSX: click here

- Windows (32-bit): click here

- Windows (64-bit): click here

- Version 2: Twenty (20) Agents

- Linux: click here

- Mac OSX: click here

- Windows (32-bit): click here

- Windows (64-bit): click here

(For Windows users) Check out this link if you need help determining whether your computer is running a 32-bit or a 64-bit version of the Windows operating system.

(For AWS) If you'd like to train the agent on AWS (and have not enabled a virtual screen), then please use this link to obtain the environment.

- Python 3.6

- PyTorch

- Unity ML-Agents

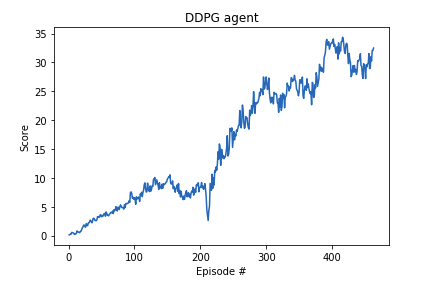

I used this DDPG implementation provided by Udacity. Since the environment contains 20 agents working in parallel, I had to make some amendments to this implementation.

- As suggested in the Benchmark implementation (Attempt #4), the agents learned from the experience tuples every 20 timesteps, and at every update step, the agents learned 10 times.

- Also, gradient clipping, as suggested in Attempt #3, helped improve the training.

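```python
# Critic update step (fragment of the agent's learning method; requires `import torch`)
self.critic_optimizer.zero_grad()
critic_loss.backward()
# Clip the gradient norm of the critic's parameters to 1 before the optimizer step
torch.nn.utils.clip_grad_norm_(self.critic_local.parameters(), 1)
self.critic_optimizer.step()
```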

- Also, to add a bit of exploration while choosing actions, as suggested in the DDPG paper, an Ornstein-Uhlenbeck process was used to add noise to the chosen actions.

- Finally, I performed a manual search for the best values of the training and model parameters.
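The update schedule and the exploration noise described above can be sketched as follows. This is a minimal NumPy-only illustration, not the code in `ddpg.py`: the names `OUNoise`, `noisy_actions`, `updates_due`, `LEARN_EVERY`, and `LEARN_PASSES` are hypothetical, and the noise parameters `theta` and `sigma` are common defaults assumed for the example rather than the tuned values used in this project.

```python
import numpy as np


class OUNoise:
    """Ornstein-Uhlenbeck process: temporally correlated exploration noise."""

    def __init__(self, size, seed=0, mu=0.0, theta=0.15, sigma=0.2):
        # mu, theta and sigma are assumed defaults, not the project's tuned values
        self.mu = mu * np.ones(size)
        self.theta = theta
        self.sigma = sigma
        self.rng = np.random.RandomState(seed)
        self.reset()

    def reset(self):
        """Reset the internal state to the mean (typically once per episode)."""
        self.state = self.mu.copy()

    def sample(self):
        """Advance the process by one step and return the new state."""
        drift = self.theta * (self.mu - self.state)
        diffusion = self.sigma * self.rng.standard_normal(self.state.shape)
        self.state = self.state + drift + diffusion
        return self.state


def noisy_actions(actions, noise):
    """Add OU noise to the actor's output and clip to the valid range [-1, 1]."""
    return np.clip(actions + noise.sample(), -1.0, 1.0)


LEARN_EVERY = 20   # learn once every 20 environment timesteps
LEARN_PASSES = 10  # number of gradient updates at each learning step


def updates_due(timestep):
    """Return how many gradient updates to run after this timestep."""
    return LEARN_PASSES if timestep % LEARN_EVERY == 0 else 0


noise = OUNoise(size=(20, 4), seed=1)
actions = noisy_actions(np.zeros((20, 4)), noise)
print(actions.shape, updates_due(20), updates_due(21))
```

In a training loop, `updates_due(t)` would decide how many times the agent samples a minibatch and learns after timestep `t`, so that 20 steps of experience from all agents are collected between bursts of 10 updates.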

- After installing all dependencies, clone this repository to your local system.

- Make sure you have Jupyter installed. To install Jupyter:

```
python3 -m pip install --upgrade pip
python3 -m pip install jupyter
```

- Code structure:

- `Continuous_Control.ipynb`: main notebook containing the training function
- `ddpg.py`: code for the DDPG agent
- `model.py`: code for the Actor and Critic networks
- `workspace_utils.py`: code to keep the Udacity workspace awake during training

The implementation was able to solve the environment in approximately 360 episodes.